I’d been interested in learning machine learning for quite a while. My interest started when I found out on an online forum that ML is a subfield of AI. Before that, my understanding of AI was limited to what I’d seen in sci-fi movies, where AI is portrayed as an artificial human that outperforms real humans in intelligence, which I didn’t find interesting.

So when I learned that projects such as testing human tissue for Microsatellite Instability (MSI), a phenomenon linked to cancer, were done using ML, my interest was captured.

This motivated me to join an AI club at my school. There I met students who were already solving problems with ML and doing very well. They participated in the NASA Space Apps Challenge every year, building ML solutions to problems related to space and Earth. I was exposed to even more exciting projects and to a lot of smart people who inspired me and strengthened my interest in the field. But I didn’t start learning it until a few years later.

Around the time I finished my third year of college, I decided to stop procrastinating and finally started my first MOOC in ML.

Soon after I started learning, my interest began to drop, largely because of the misconceptions I had going in. Coming from a programming background, I found machine learning to be different from what I’d imagined.

These were my misconceptions, and what I’ve come to realize. By the way, I am still new to ML, and I believe I still have a lot to learn about the field.

Judging too soon

The day I trained my first linear regression model, I suspected I must be missing something. Where was I supposed to apply my knowledge of mathematics, statistics, and computer science when all I needed was three lines of code?
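To make the “three lines” concrete, here is a minimal sketch using scikit-learn’s `LinearRegression`. The library is an assumption (the post doesn’t name the package), and the toy data is invented for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Toy data (hypothetical): y = 2x + 1, no noise
X = np.array([[0.0], [1.0], [2.0], [3.0], [4.0]])
y = 2 * X.ravel() + 1

# The "three lines": create, fit, predict
model = LinearRegression()
model.fit(X, y)
predictions = model.predict(np.array([[5.0]]))

print(model.coef_, model.intercept_, predictions)
```

All of the least-squares math from a statistics class happens inside `fit`, which is exactly why it can feel like none of that background is being used.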

I had learned calculus in my first year and linear algebra in my second, and I had taken some statistics courses. I remembered that linear regression was just one topic in a statistics class. I felt fully prepared to learn machine learning, only to find out that, at least for the moment, I didn’t need any of this knowledge. I was not happy about that.

With each new project, I knew I was copying and pasting code without gaining any deep understanding. I found switching back and forth between the documentation of the Python package I used and my project irritating, and it put me off.

I concluded that learning the prerequisites had been pointless, since I didn’t see them being used directly in training any model. I knew in principle what the models were made of, but because I couldn’t find a way to engage with those inner workings in my projects (coupled with how abstract training a model in Python felt), I lost interest.

Well, that was before I discovered Exploratory Data Analysis (EDA). Wikipedia defines EDA as an approach to “analyzing data sets to summarize their main characteristics”. In practice, it means computing statistics and making visualizations that help you understand the data you’re dealing with.
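A first pass of EDA often looks something like the sketch below, using pandas. The DataFrame and its columns are hypothetical, chosen only to show the kind of summary EDA produces; plots (histograms, scatter plots) would usually follow.

```python
import pandas as pd

# Hypothetical dataset: replace with your own DataFrame
df = pd.DataFrame({
    "age": [25, 32, 47, 51, 38, 29],
    "salary": [40_000, 52_000, 61_000, 250_000, 58_000, 45_000],
    "department": ["sales", "tech", "tech", "sales", "hr", "tech"],
})

# Summary statistics for numerical columns: count, mean, std, min, quartiles, max
print(df.describe())

# Missing values per column
print(df.isna().sum())

# How often each category appears
print(df["department"].value_counts())

# Pairwise correlation between numerical columns
print(df[["age", "salary"]].corr())
```

Even on six rows, `describe()` makes the 250,000 salary stand out: its max is far above the 75th percentile, which is exactly the kind of observation that starts the outlier discussion below.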

You might be wondering whether I hadn’t encountered EDA in my first MOOC. The truth is, I had. But I only started to understand it properly once I experimented with it myself.

With EDA, I discovered things about data that I had never noticed before, and this has been very useful in my ML projects. I’ve come to understand that machine learning mostly revolves around the data you’re using for a project.

And as you explore the data with EDA, you find insights that push you to use the underlying math knowledge and skills I’d been excited to work with.

Here’s an example of something I learned with EDA that was exciting to me…

Outliers. Finding outliers in your data, for example after making a regression plot, leads you to decide whether to leave them in the dataset (say, because it’s an anomaly detection problem) or to remove or replace them.
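Besides eyeballing a plot, a common numerical check is the 1.5 × IQR rule of thumb. The sketch below is one way to apply it with NumPy; the rule and the `k=1.5` default are a widely used convention, not necessarily what the author did, and the data is hypothetical.

```python
import numpy as np

def iqr_outliers(values, k=1.5):
    """Return a boolean mask marking values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return (values < lower) | (values > upper)

salaries = [40_000, 52_000, 61_000, 250_000, 58_000, 45_000]
mask = iqr_outliers(salaries)
print([s for s, is_out in zip(salaries, mask) if is_out])  # [250000]
```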

If you leave the outliers in because it’s an anomaly detection problem, understanding how Mean Absolute Error (MAE) and Mean Squared Error (MSE) work will lead you to choose MSE over MAE. MSE gives more weight to outliers (in this case called novelties, which you want the model to notice) because it squares each error, which magnifies any error larger than 1.

However, if you leave the outliers in so that the model generalizes well despite them, you’ll choose MAE, because it is more robust to outliers.
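The difference between the two losses is easy to see numerically. This NumPy sketch uses made-up predictions: one set where every error is 0.5, and one where a single prediction is off by 10.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean Absolute Error: average of |error|."""
    return np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred)))

def mse(y_true, y_pred):
    """Mean Squared Error: average of error**2."""
    return np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2)

y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred_clean = np.array([3.5, 4.5, 7.5, 8.5])    # every error is 0.5
y_pred_outlier = np.array([3.5, 4.5, 7.5, 19.0])  # one error of 10

print(mae(y_true, y_pred_clean), mse(y_true, y_pred_clean))      # 0.5 0.25
print(mae(y_true, y_pred_outlier), mse(y_true, y_pred_outlier))  # 2.875 25.1875
```

The single large error raises MAE by less than 6× but MSE by about 100×, which is why MSE suits cases where outliers matter and MAE suits cases where they should not dominate.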

This could lead you to discover that MAE can be awkward for training neural networks. Its gradient has the same magnitude whatever the size of the error, so it stays large even when the loss is small, whereas the MSE gradient shrinks as the loss approaches 0. As a result, MAE can make the backpropagation step less stable near the minimum.
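The gradient behaviour can be checked directly. This sketch computes the per-sample derivative of each loss with respect to the prediction, for error e = y_pred − y_true (the MAE derivative is undefined at exactly 0; `np.sign` returns 0 there).

```python
import numpy as np

def mae_grad(error):
    # d|e|/de = sign(e): magnitude is always 1, however small the error
    return np.sign(error)

def mse_grad(error):
    # d(e**2)/de = 2e: shrinks toward 0 as the error shrinks
    return 2 * error

errors = np.array([10.0, 1.0, 0.1, 0.01])
for e, g_abs, g_sq in zip(errors, mae_grad(errors), mse_grad(errors)):
    print(f"error={e:<5} MAE grad={g_abs:+.2f}  MSE grad={g_sq:+.2f}")
```

With a fixed learning rate, MAE keeps taking full-size steps even at an error of 0.01, while MSE’s steps naturally get smaller as the model approaches the minimum.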

That in turn leads you to the Huber loss function, which combines MSE and MAE: it behaves like MSE for small errors and like MAE for large ones, which makes it less sensitive to outliers than MSE. And if you can’t find it in the framework you’re using, you end up writing it from scratch.
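Writing Huber loss from scratch is short. The NumPy sketch below follows the standard definition with threshold `delta` (1.0 here, an arbitrary default): 0.5·e² when |e| ≤ delta, and delta·(|e| − 0.5·delta) otherwise. Current versions of Keras also ship a built-in `tf.keras.losses.Huber`; a custom Keras loss would use TensorFlow ops instead of NumPy.

```python
import numpy as np

def huber_loss(y_true, y_pred, delta=1.0):
    """Mean Huber loss: quadratic (like MSE) for small errors, linear (like MAE) for large ones."""
    error = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    abs_error = np.abs(error)
    quadratic = 0.5 * error ** 2                # used where |error| <= delta
    linear = delta * (abs_error - 0.5 * delta)  # used where |error| > delta
    return np.mean(np.where(abs_error <= delta, quadratic, linear))

# Same made-up data as the MAE/MSE comparison: three errors of 0.5, one of 10
print(huber_loss([3.0, 5.0, 7.0, 9.0], [3.5, 4.5, 7.5, 19.0]))  # 2.46875
```

The two branches meet at |e| = delta with the same value and slope, so the loss stays smooth while the large error contributes only linearly.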

On the other hand, if you decide to replace the outliers, you need an appropriate method for doing so. You might use winsorising, which caps extreme values (outliers) at chosen lower and upper percentiles of your data, or binning, which groups numerical values into categorical bins.
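Both options take only a few lines. In this sketch the 10th/90th percentile caps and the bin edges and labels are arbitrary, hypothetical choices; with only six values the percentiles are interpolated, so the cap lands between existing values.

```python
import numpy as np
import pandas as pd

salaries = np.array([40_000, 52_000, 61_000, 250_000, 58_000, 45_000], dtype=float)

# Winsorising: cap values at chosen lower/upper percentiles (10th and 90th here)
low, high = np.percentile(salaries, [10, 90])
winsorised = np.clip(salaries, low, high)

# Binning: turn the numerical column into ordered categories (hypothetical edges)
bins = pd.cut(salaries, bins=[0, 45_000, 60_000, np.inf], labels=["low", "mid", "high"])

print(winsorised)
print(list(bins))
```

Winsorising keeps the column numerical but limits the outlier’s pull; binning discards the exact magnitude entirely, so the outlier simply becomes another “high” value.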

This underlying complexity appealed to me because it made me feel like I was solving a problem. I had always loved the convincing, precise way problems are solved with mathematics: it’s grounded in facts and produces accurate results. So when I can dig in and engage with the math, I’m pleased, not because of the math per se (i.e., its complexity), but because its precision gives me so much control over, and confidence in, what I’m doing.

My developer mindset

Initially, I felt machine learning was overhyped, and that in reality there was not much work to be done in an ML project. I thought the models did all the work and that it involved no real programming. I even believed that ML engineers didn’t need to know which patterns the models learned and used to make predictions.

My impression was that ML was monotonous. Every project followed the same steps: collecting and processing data, visualizing it, and calling a function from some package to do the actual machine learning. This was boring.

Was this the machine learning I had always romanticized? It was neither mentally engaging nor appealing in any way. It definitely isn’t computer science, I thought.

In computer science and programming, I had learned to break problems into smaller executable steps. I enjoyed defining a problem, designing a solution, writing code, and then going back to refactor it with time complexity in mind. I liked learning about algorithms, data structures, software architecture, and so on. That made software development engaging, and it wasn’t monotonous the way ML seemed to be.

After a while, I began to see that my initial dislike of machine learning came from being keener on writing code than on solving problems. I had expected to code different models from scratch for every project, flexing my coding muscles again and again.

I later realized that the emphasis should be on problem-solving, not coding. These days I reuse a lot of previously written code. In fact, I wouldn’t mind doing machine learning without writing any code at all.

Hyperparameter tuning/Watching a model train

Hyperparameter tuning

My inexperienced self also found hyperparameter tuning mundane. It felt like gambling, because there was no formula for finding the right settings apart from using solutions from companies like Google. So I picked arbitrary values for a hyperparameter and trained and validated repeatedly until I found one that worked.

This turned out to be repetitive and boring, especially when training took a long time. I disliked selecting values for the different hyperparameters because I couldn’t tell which value would suit a particular size or type of data. The clues in the data were usually not enough to be sure which to choose.

So I endured the stress and kept experimenting with values on every project, loathing the whole process. It wasn’t only that it was repetitive; I also felt I wasn’t doing anything productive. I kept asking myself: is this machine learning? It looked like something a kid would do for fun.

I’ve come to believe that experience and a deep understanding of the field help you find the right hyperparameters for your model faster.

For instance, I sometimes tune some hyperparameters before others at the start, to reduce training time.

A trivial example: when tuning a random forest model, I set n_estimators (the number of independent decision trees in the ensemble) to a low value and only increase it at the end, once I’m satisfied with the model’s performance. I realized that having many trees in the ensemble makes the model train slowly.

What I’m trying to show is that small tweaks to the training process can make it less time-consuming, putting you in more control and making it less boring and tedious.

If you tune your models blindly (setting a random value for a hyperparameter, testing, changing it to another random value, and testing again), you’ll get bored quickly, and it will wear you out in no time. Having some idea of what you want to achieve, and experimenting with hyperparameters based on that idea, makes the process much less boring.
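The “few trees first, more trees last” idea can be sketched with scikit-learn’s `RandomForestClassifier`. Synthetic data from `make_classification` stands in for a real dataset, and using `max_depth` as the other hyperparameter being tuned is an assumption for illustration; the tree counts (20 and 300) are arbitrary.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic data stands in for a real dataset
X, y = make_classification(n_samples=2000, n_features=20, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.25, random_state=0)

def fit_and_score(n_estimators, max_depth):
    """Train a forest and return its accuracy on the validation split."""
    model = RandomForestClassifier(n_estimators=n_estimators, max_depth=max_depth,
                                   random_state=0, n_jobs=-1)
    model.fit(X_train, y_train)
    return model.score(X_val, y_val)

# Phase 1: explore another hyperparameter cheaply, with only a few trees
scores = {depth: fit_and_score(n_estimators=20, max_depth=depth) for depth in [4, 8, None]}
best_depth = max(scores, key=scores.get)

# Phase 2: once satisfied, raise n_estimators for the final model
final_score = fit_and_score(n_estimators=300, max_depth=best_depth)
print(scores, best_depth, final_score)
```

More trees mostly reduce variance rather than change which settings work best, which is why deferring a large n_estimators to the end usually costs little.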
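One way to replace blind guessing with an idea-driven search is to write the hypothesis down and test only the values it suggests. This sketch uses scikit-learn’s `GridSearchCV`; the hypothesis (“the forest may be overfitting, so try limiting tree complexity”), the grid values, and the synthetic data are all illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic data stands in for a real dataset
X, y = make_classification(n_samples=1000, n_features=20, random_state=0)

# Hypothesis (illustrative): the forest overfits, so test a few values that limit tree complexity
param_grid = {
    "max_depth": [4, 8, None],
    "min_samples_leaf": [1, 5, 20],
}

search = GridSearchCV(
    RandomForestClassifier(n_estimators=50, random_state=0),  # few trees while exploring
    param_grid,
    cv=3,
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

A small, reasoned grid like this (9 combinations) tells you whether the hypothesis holds, which is more informative than the same number of random guesses.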

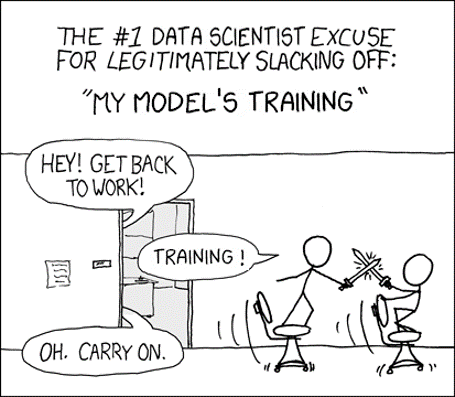

Watching a model train

I found the time spent training an ML model unbearable, and it was a big part of my distaste for ML. I think it felt that way because of how I handled hyperparameter tuning.

I always felt uneasy during the training step. I wished it would finish faster so I could focus on something else: trying a different parameter value, starting a different project, or learning something new. Learning something new was what I cared about most.

I often failed to resist imagining how much I could have achieved by learning something else instead, like Golang or GraphQL. I believed I would have achieved more with that time.

The funny part was that I couldn’t switch to something else while a model was taking a long time to train. If I did, my low-end laptop would freeze, and I’d have to restart it and start over.

These days, I’ve gotten used to waiting for my models to train because I use that time to write down different ideas I want to implement in the project. I write down the cross-validation tactics I wish to use or some feature generation ideas I wish to include.

Kaggle

I remember once asking a friend if I could use Kaggle to build experience for a program I was interested in. He chuckled and replied with a weird “…yiieah”. I was embarrassed, because I felt my question made me look like I was after money.

I also didn’t expect to win any prizes on Kaggle because I didn’t have the experience. Trying seemed like a waste of time, so I avoided it.

I had always avoided Kaggle because I felt it was all about the prizes and that there was nothing to learn from a competition. I thought real-world practice would be different from what happens in a competition, just as competitive programming is different from being a programmer at a tech company. I also didn’t want to be guilted or shamed by anyone, so I never talked about it. This turned out to be my biggest misconception.

I understood this better after I entered an in-class competition hosted on Kaggle by a MOOC. It was a binary classification problem, where we were tasked with predicting whether or not an employee of a company would be promoted.

I trained a single random forest model and got a validation score of 99%. I was overjoyed. I rushed to Kaggle and uploaded my predictions, expecting to rank near the top of the class, not knowing I was in for a shock. I ranked 390th out of 530 participants with a score of 93%, while the best score in the competition was 97%.

It was after this competition that I understood what Kaggle and machine learning were really about. I quickly realized Kaggle wasn’t just a place to make money by playing with a model’s hyperparameters; it was a place where machine learning could actually be learned.

Many things come into play in winning a Kaggle competition, and they are inherently useful: proper validation techniques and an understanding of the data you have, for example.

A proper validation technique would have given me a validation score much closer to my Kaggle score. The same applies outside Kaggle: if you don’t validate your model properly, it won’t perform well on new data. Kaggle teaches you to improve model accuracy without overfitting the training data. These are incredibly important lessons.

Kaggle was also where I developed my intuition about ML, and I found the community there very helpful. They teach you how to do real machine learning, the way experts do it.
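The post doesn’t say which validation scheme was used or how the competition was scored, so the following is only a sketch of one common “proper” setup for binary classification: stratified k-fold cross-validation with scikit-learn. It assumes the target is imbalanced (as promotion labels plausibly are), simulated with `make_classification(weights=[0.9, 0.1])`, and it reports F1 as an illustrative metric.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Imbalanced synthetic data (about 10% positives), standing in for a "promoted or not" target
X, y = make_classification(n_samples=2000, n_features=20, weights=[0.9, 0.1], random_state=0)

model = RandomForestClassifier(n_estimators=100, random_state=0)

# Stratified folds keep the class ratio the same in every fold
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(model, X, y, cv=cv, scoring="f1")

print(f"F1 per fold: {scores.round(3)}")
print(f"mean={scores.mean():.3f}, std={scores.std():.3f}")
```

Averaging over five held-out folds, and looking at the spread between them, gives a far more honest estimate than a single lucky split; on imbalanced data, a metric like F1 also avoids the inflated picture plain accuracy can give.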

Conclusion

You won’t always understand a concept until you’ve experimented with it yourself. You learn more by implementing ML concepts from scratch and by implementing them in your projects.

If possible, avoid MOOCs and instead read research papers and try implementing them on your own. It will teach you so much about the algorithms, which will help you in the future, especially if you plan to go into research.

I know of a case where an intern with no prior knowledge of ML became a junior researcher within a month, simply by implementing computer vision research papers.

In addition to that, get a mentor or join an online community. Having an experienced professional that you can ask for guidance can be very helpful in diverse ways. It’s also a good way to stay motivated and build a network!
