There were quite a few interesting announcements during WWDC 2020. Without a doubt, the enhancements in SwiftUI 2.0 and Apple’s bold decision to move the Mac away from Intel in favor of its in-house Apple silicon chips were the major talking points.

But that didn’t stop Apple from showcasing its computer vision ambitions once again this year. The Vision framework has been enhanced with some exciting new updates for iOS 14.

With iOS 13, Apple expanded the breadth of the Vision framework by introducing a variety of requests, ranging from text recognition to built-in image classification, along with improvements in face recognition and detection.

In iOS 14, Apple has focused many of its efforts on increasing the depth of its Vision framework.

Primarily, the new Vision requests introduced in iOS 14 enable new ways to perform action classification and analysis, which will help developers build immersive gameplay experiences, fitness apps, and sports coaching applications (a category that has seen huge demand in recent times).

Let’s dig through the new Vision requests and utilities that were announced at WWDC 2020.

Vision Contour Detection Request

Contour detection finds outlines of shapes in an image. It looks to join all the continuous points that have the same color or intensity. This request finds good use in scenarios like coin detection and finding other objects in an image and grouping them by size, aspect ratio, etc.

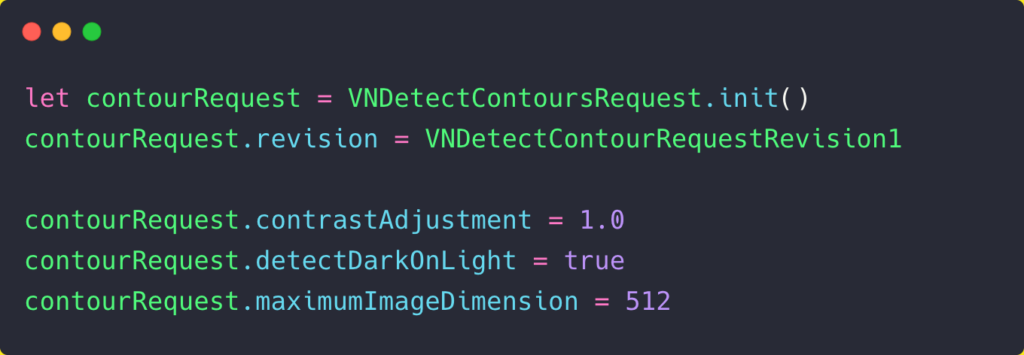

Like every Vision request, the idea is simple: pass the request to a VNImageRequestHandler for the image, then read the results back as a VNContoursObservation.
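As a rough illustration of that flow, the sketch below configures a VNDetectContoursRequest and runs it on a CGImage. The helper names makeContourRequest and detectContours are hypothetical, and the tuning values are placeholders rather than recommendations.

```swift
import CoreGraphics
import Vision

/// Hypothetical helper: builds a contour request with illustrative settings.
func makeContourRequest() -> VNDetectContoursRequest {
    let request = VNDetectContoursRequest()
    request.contrastAdjustment = 1.5       // placeholder value; tune for your images
    request.maximumImageDimension = 512    // larger images are scaled down before detection
    return request
}

/// Hypothetical helper: runs the request and returns the contour observation, if any.
func detectContours(in image: CGImage) throws -> VNContoursObservation? {
    let request = makeContourRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])   // synchronous, so call it off the main thread
    return request.results?.first as? VNContoursObservation
}
```

The returned observation exposes contourCount, topLevelContours, and a normalizedPath (a CGPath in normalized coordinates) that can be scaled to the image size for drawing.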

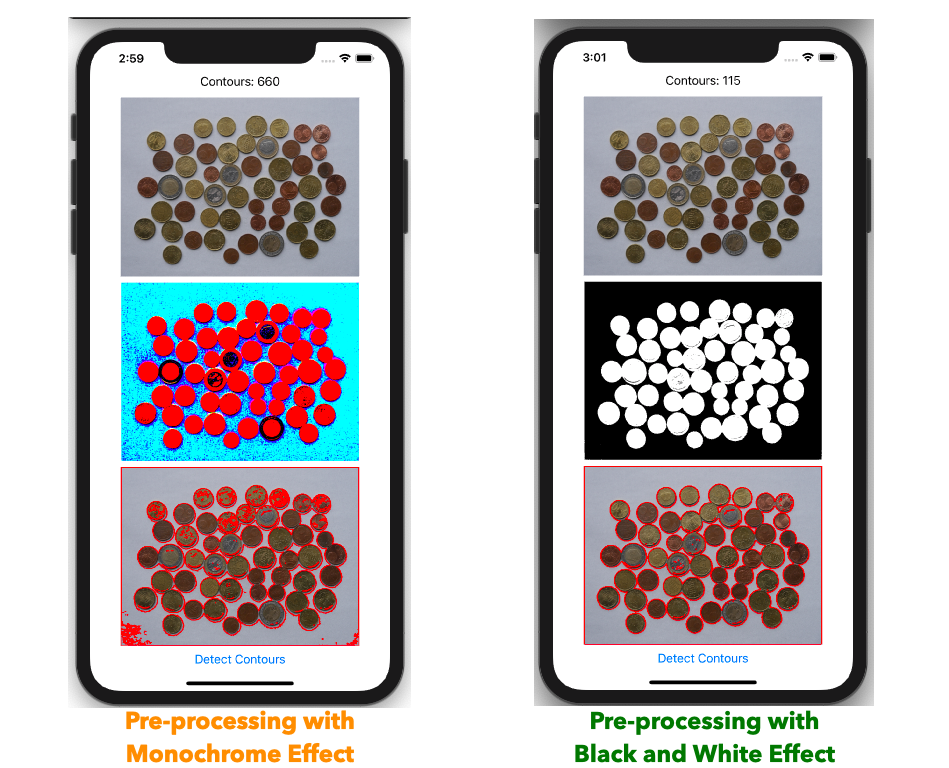

Core Image is a really handy framework for pre-processing images for contour detection requests. It helps smooth textures, which in turn lets us simplify contours and mask parts that aren’t in the region of interest.
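A minimal sketch of such a pre-processing step might look like the following. The choice of a Gaussian blur, its radius, and the helper names smoothed and detectContours(inPreprocessed:) are all assumptions made for illustration; the right filter depends on the image.

```swift
import CoreImage
import CoreImage.CIFilterBuiltins
import Vision

/// Hypothetical helper: blurs the image slightly to suppress fine texture.
func smoothed(_ image: CIImage, radius: Float = 2.0) -> CIImage {
    let blur = CIFilter.gaussianBlur()
    blur.inputImage = image
    blur.radius = radius
    // The blur grows the image extent, so crop back to the original bounds.
    return (blur.outputImage ?? image).cropped(to: image.extent)
}

/// Hypothetical helper: runs contour detection on the pre-processed image.
func detectContours(inPreprocessed image: CIImage) throws -> VNContoursObservation? {
    let request = VNDetectContoursRequest()
    let handler = VNImageRequestHandler(ciImage: smoothed(image), options: [:])
    try handler.perform([request])
    return request.results?.first as? VNContoursObservation
}
```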

The screenshot below shows what happens when you use the wrong filter for pre-processing: it adds more contours instead of reducing them (left image).

VNGeometryUtils is also a handy utility class introduced this year for analyzing contour shapes, bounding circles, area, and diameter.
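To give a feel for the utility, here is a small sketch that reads a few measurements off a single VNContour. The ContourMetrics struct and the metrics(for:) function are hypothetical names, and the values come back in Vision's normalized coordinate space rather than in pixels.

```swift
import Vision

/// Hypothetical container for the measurements read off one contour.
struct ContourMetrics {
    let area: Double
    let perimeter: Double
    let boundingDiameter: Double
}

/// Hypothetical helper: collects area, perimeter, and bounding-circle diameter.
func metrics(for contour: VNContour) throws -> ContourMetrics {
    var area = 0.0
    var perimeter = 0.0
    // Both calls write their result through the pointer argument.
    try VNGeometryUtils.calculateArea(&area, for: contour, orientedArea: false)
    try VNGeometryUtils.calculatePerimeter(&perimeter, for: contour)
    let circle = try VNGeometryUtils.boundingCircle(for: contour)
    return ContourMetrics(area: area,
                          perimeter: perimeter,
                          boundingDiameter: circle.diameter)
}
```

Applied to each entry of an observation's topLevelContours, measurements like these are one way to group detected objects by size.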

Optical Flow

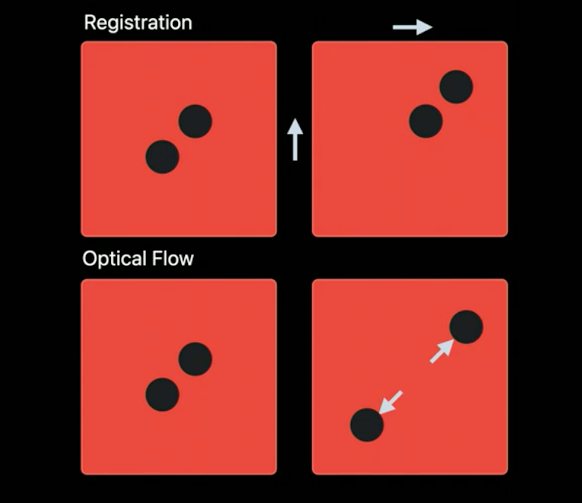

VNGenerateOpticalFlowRequest deals with the directional flow of individual pixels across frames, and is used in motion estimation, surveillance tracking, and video processing.

Unlike image registration, which tells you the alignment of the whole image with respect to another image, optical flow helps in analyzing only regions that have changed based on the pixel shifts.
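The sketch below shows one way to request optical flow between two same-sized frames. The helper names are hypothetical, the accuracy setting is arbitrary, and the choice of which frame the request targets versus which one goes to the handler reflects my reading of the API documentation, so verify it against your own results.

```swift
import CoreVideo
import Vision

/// Hypothetical helper: builds a flow request that targets the given frame.
func makeFlowRequest(targeting frame: CVPixelBuffer) -> VNGenerateOpticalFlowRequest {
    let request = VNGenerateOpticalFlowRequest(targetedCVPixelBuffer: frame, options: [:])
    request.computationAccuracy = .high   // arbitrary choice; lower settings run faster
    return request
}

/// Hypothetical helper: estimates per-pixel motion between two frames.
func opticalFlow(from previous: CVPixelBuffer, to current: CVPixelBuffer) throws -> CVPixelBuffer? {
    // Assumption: the request targets the earlier frame and the handler holds the later one.
    let request = makeFlowRequest(targeting: previous)
    let handler = VNImageRequestHandler(cvPixelBuffer: current, options: [:])
    try handler.perform([request])
    // The observation wraps a pixel buffer holding one flow vector per pixel.
    return (request.results?.first as? VNPixelBufferObservation)?.pixelBuffer
}
```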

Optical flow is one of the trickiest computer vision algorithms to master, primarily because it’s highly sensitive to noise (even a shadow can significantly change the final results).

iOS 14 also introduced VNStatefulRequest, a subclass of VNImageBasedRequest that takes previous Vision results into consideration. This request is handy for optical flow and trajectory detection Vision requests, as it helps in building evidence over time.

Hand and Body Pose Estimation

After bolstering its face recognition technology with more refined detection and face capture quality requests last year, Apple has now introduced two new requests — hand and body pose estimation.

The new VNDetectHumanHandPoseRequest has opened possibilities for building touchless, gesture-based applications based on the 21 landmark points returned in each VNHumanHandPoseObservation (a subclass of VNRecognizedPointsObservation).

Drawing with hand gestures is now literally possible by tracking the thumb and index finger points. Among other things, you can detect and track a given number of hands and also analyze the kinds of instruments a person is interacting with or playing (for example, guitars and keyboards).
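As a sketch of the thumb-and-index idea, the code below pulls the two fingertip landmarks from the first detected hand and checks whether they are close enough to count as a pinch. The helper names, the 0.05 distance threshold, and the 0.3 confidence cutoff are all assumptions chosen for illustration.

```swift
import CoreGraphics
import CoreVideo
import Vision

/// Pure helper: treats the fingers as pinched when the two tips are close together.
/// Points are in Vision's normalized coordinates; the threshold is an arbitrary guess.
func isPinching(thumb: CGPoint, index: CGPoint, threshold: CGFloat = 0.05) -> Bool {
    hypot(thumb.x - index.x, thumb.y - index.y) < threshold
}

/// Hypothetical helper: thumb and index fingertip locations for the first detected hand.
func fingertips(in frame: CVPixelBuffer) throws -> (thumb: CGPoint, index: CGPoint)? {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = 1
    let handler = VNImageRequestHandler(cvPixelBuffer: frame, options: [:])
    try handler.perform([request])
    guard let hand = request.results?.first as? VNHumanHandPoseObservation else { return nil }
    let thumb = try hand.recognizedPoint(.thumbTip)
    let index = try hand.recognizedPoint(.indexTip)
    // Skip low-confidence landmarks (0.3 is an assumed cutoff).
    guard thumb.confidence > 0.3, index.confidence > 0.3 else { return nil }
    return (thumb.location, index.location)
}
```

A drawing app could append the index fingertip to a path while isPinching returns true and lift the "pen" when it returns false.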

Another exciting use case of hand pose is in auto-capturing selfies in your custom camera-based applications.

Human body pose estimation is another exciting addition to the Vision framework this year. By leveraging the VNDetectHumanBodyPoseRequest, you can identify different body poses of multiple people in a given frame.

From tracking if your exercise form is correct to determining the perfect action shot in a video to creating stromotion effects (by using different body poses of a person across frames and blending them), the possibilities of using body pose in camera-based applications are endless.
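For the exercise-form use case, one possible approach is to measure joint angles from the returned landmarks. The sketch below computes the right elbow angle for every person in a frame; the helper names and the 0.3 confidence cutoff are assumptions, and a real app would likely smooth the values across frames.

```swift
import CoreGraphics
import CoreVideo
import Foundation
import Vision

/// Pure helper: the angle in degrees (0...180) at `vertex` between the segments to `a` and `b`.
func jointAngle(at vertex: CGPoint, from a: CGPoint, to b: CGPoint) -> CGFloat {
    let first = atan2(a.y - vertex.y, a.x - vertex.x)
    let second = atan2(b.y - vertex.y, b.x - vertex.x)
    let degrees = abs(first - second) * 180 / .pi
    return degrees > 180 ? 360 - degrees : degrees
}

/// Hypothetical helper: right elbow angle for each person detected in the frame.
func rightElbowAngles(in frame: CVPixelBuffer) throws -> [CGFloat] {
    let request = VNDetectHumanBodyPoseRequest()
    let handler = VNImageRequestHandler(cvPixelBuffer: frame, options: [:])
    try handler.perform([request])
    let people = request.results as? [VNHumanBodyPoseObservation] ?? []
    return try people.compactMap { person -> CGFloat? in
        let joints = try person.recognizedPoints(.all)
        // Ignore people whose arm landmarks are missing or unreliable.
        guard let shoulder = joints[.rightShoulder],
              let elbow = joints[.rightElbow],
              let wrist = joints[.rightWrist],
              min(shoulder.confidence, elbow.confidence, wrist.confidence) > 0.3
        else { return nil }
        return jointAngle(at: elbow.location, from: shoulder.location, to: wrist.location)
    }
}
```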

Trajectory Detection

VNDetectTrajectoriesRequest is used for identifying and analyzing trajectories across multiple frames of videos and live photos. It’s a stateful request, which implies previous Vision results are required to build evidence over time.

Trajectory detection requests would be extremely useful for analyzing the performance of athletes and building insights from it.

From soccer to golf to cricket, visualizing trajectories of balls would be entertaining for the end-user, in addition to providing important analytics.

VNDetectTrajectoriesRequest requires setting frameAnalysisSpacing for intervals at which the Vision request should run, along with trajectoryLength, to determine the number of points you’re looking to analyze in the trajectory.

Additionally, you can set minimumObjectSize and maximumObjectSize to filter out noise. The results (there can be multiple trajectories) are returned as VNTrajectoryObservation instances, each of which provides detected and projected points. Projected points are extremely handy for estimating where the target object is in real-world space. Just imagine: building a hawk-eye projection is now possible on-device using Vision in iOS 14.
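A hedged sketch of that configuration follows. The helper name makeTrajectoryRequest and all numeric values are assumptions; the size filters are set through objectMinimumNormalizedRadius and objectMaximumNormalizedRadius, which, as far as I can tell, are the names the current SDK uses for the minimum and maximum object size settings.

```swift
import CoreMedia
import Vision

/// Hypothetical helper: builds a trajectory request and forwards results to a callback.
func makeTrajectoryRequest(
    onResults: @escaping ([VNTrajectoryObservation]) -> Void
) -> VNDetectTrajectoriesRequest {
    // A spacing of .zero analyzes every frame; 10 points per trajectory is a placeholder.
    let request = VNDetectTrajectoriesRequest(frameAnalysisSpacing: .zero,
                                              trajectoryLength: 10) { finished, error in
        guard error == nil,
              let trajectories = finished.results as? [VNTrajectoryObservation]
        else { return }
        onResults(trajectories)
    }
    // Placeholder size filters, expressed as a fraction of the frame.
    request.objectMinimumNormalizedRadius = 0.01
    request.objectMaximumNormalizedRadius = 0.2
    return request
}
```

Because the request is stateful, the same instance should be kept and performed again for each new frame (for example with a fresh VNImageRequestHandler per sample buffer); creating a new request per frame would discard the accumulated evidence. Each observation then offers detectedPoints and projectedPoints to draw or analyze.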

A Utility For Offline Vision Video Processing

Last but not least is the new utility class VNVideoProcessor, which lets you perform Vision requests on videos offline. VNVideoProcessor lets you pass a video asset URL, set the time range for which you want to run the Vision requests, and add or remove requests easily.
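In code, that workflow could look roughly like the sketch below. The helper names leadingRange and analyzeVideo are hypothetical, and the default processing options are used only to keep the example short.

```swift
import CoreMedia
import Foundation
import Vision

/// Pure helper: a time range covering the first `seconds` of a video.
func leadingRange(seconds: Double) -> CMTimeRange {
    CMTimeRange(start: .zero, duration: CMTime(seconds: seconds, preferredTimescale: 600))
}

/// Hypothetical helper: runs one Vision request over part of a video file.
func analyzeVideo(at url: URL, with request: VNRequest, over range: CMTimeRange) throws {
    let processor = VNVideoProcessor(url: url)
    try processor.addRequest(request,
                             processingOptions: VNVideoProcessor.RequestProcessingOptions())
    // Blocks until the range has been processed; results arrive through the
    // request's completion handler, so call this from a background queue.
    try processor.analyze(range)
}
```

For example, analyzeVideo(at: videoURL, with: someRequest, over: leadingRange(seconds: 5)) would process the first five seconds of the file, where videoURL and someRequest stand in for your own values.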

Now, this might not look fancy, but it’s the most underrated introduction in the Vision framework this year.

From detecting anomalies in videos to analyzing if the content is NSFW or not before playing, the possibilities of this utility class are truly limitless. Moreover, trajectory detection and optical flow requests require a steady camera, preferably with a tripod, which makes the introduction of the offline video processor a great addition.

As a side note, with the introduction of a native VideoPlayer in SwiftUI 2.0 this year, VNVideoProcessor will only boost pure SwiftUI computer vision applications that do more than just image processing. The SwiftUI Video Player and VNVideoProcessor are a match made in heaven.

Conclusion

Apple’s Vision framework has been regularly receiving interesting updates over the years, and WWDC 2020 has only pushed the envelope further.

From the look of it, the Vision requests introduced this year (namely hand and body pose, trajectory detection, and optical flow) are key players for augmented reality and might eventually play a huge role in how users interact with Apple’s much-anticipated AR glasses.

By introducing these exciting Vision requests this year, the Cupertino tech giant has just showcased the possibilities and new use cases of computer vision in mobile applications.
