If I gave you 30 minutes and a reasonably powerful computer, you could train a machine learning model to recognize dozens of breeds of dogs with higher accuracy than most humans.

This is partially due to great tools and frameworks like TensorFlow or PyTorch, but it’s also due to the availability of data. At some point, generous researchers decided to take the time to label a few thousand images of pets and release that data publicly.

Try to train a similar model for species of fish, and you’ll find yourself spending weeks or thousands of dollars sourcing and labeling data yourself.

This is machine learning’s cold start problem. The cost of data acquisition is prohibitive, and it keeps many from even starting. And even if you can get started, more data is better, and the big companies have all of it. The data moat is already too wide and the rich will just get richer — or at least that’s how the logic goes.

Over the past few years, though, a new data source has emerged and it’s radically changing the economics of machine learning: synthetic data. Rather than collecting and annotating data by hand, we’re getting better at creating it programmatically, and in some cases, it’s even better for training models than the stuff collected from the real world. A bridge over the data moat, if you will.

What is synthetic data?

Synthetic data is data that’s generated programmatically. For example: photorealistic images of objects in arbitrary scenes rendered using video game engines or audio generated by a speech synthesis model from known text. It’s not unlike traditional data augmentation where crops, flips, rotations, and distortions are used to increase the variety of data that models have to learn from. Synthetically generated data takes those same concepts even further.

Most of today’s synthetic data is visual. Tools and techniques developed to create photorealistic graphics in movies and computer games are repurposed to create the training data needed for machine learning. Not only can these rendering engines produce arbitrary numbers of images, they can also produce the annotations, too. Bounding boxes, segmentation masks, depth maps, and any other metadata is output right alongside pictures, making it simple to build pipelines that produce their own data.

Synthetic data isn’t limited to physics-based rendering engines. Generative models like GANs and VAEs are producing results good enough for training. Google, for example, recently mixed audio clips generated from speech synthesis models with real data while training the latest version of their automatic speech recognition network.

Because samples are generated programmatically along with annotations, synthetic datasets are far cheaper to produce than traditional ones. That means we can create more data and iterate more often to produce better results. Need to add another class to your model? No problem. Need to add another keypoint to the annotation? Done.

What sorts of models can I train with synthetic data?

The models that can be trained with synthetic data are limited by the richness of the data we can produce. In the case of images created with 3D rendering software, we’re able to replicate much of the richness of a real image, thanks to our understanding of light and physics. Producing a synthetic dataset of electronic medical records is much harder. We don’t have as complete an understanding of the process that generates them.

Within computer vision, though, it’s possible to train models to perform many common tasks based entirely on synthetic data. Object detection, segmentation, optical flow, pose estimation, and depth estimation are all achievable with today’s tools. In audio processing, automatic speech recognition, audio denoising, and speaker isolation tasks can also make use of generated data. Finally, reinforcement learning has benefited greatly from the ability to test policies in simulated environments, making it possible to train models for self-driving cars and robots that sit on factory floors.

With most of these tasks, though, synthetic data is useful for training models with limited scope. Training a model to estimate hand position or recognize a single toy is achievable, but detecting hundreds of objects will likely require advanced data generation and modeling knowledge.

Where synthetic data really shines is with its ability to produce data and annotations for nearly limitless objects and tasks. Every product on a store shelf can be scanned and rendered as a 3D object. Positions and orientations of even the most complicated objects can be tracked programmatically.

Does it really work?

I was pretty skeptical at first, but over the past year, I’ve seen more and more models trained on majority or entirely synthetically-generated datasets.

As early as 2016, a dataset known as SceneNet was released, containing millions of images of rendered 3D interiors. Images are accompanied by depth and and segmentation maps, among other annotations. With additional augmentation, researchers were able to train models on purely synthetic data that achieved near state-of-the-art performance on depth prediction tasks (pdf link).

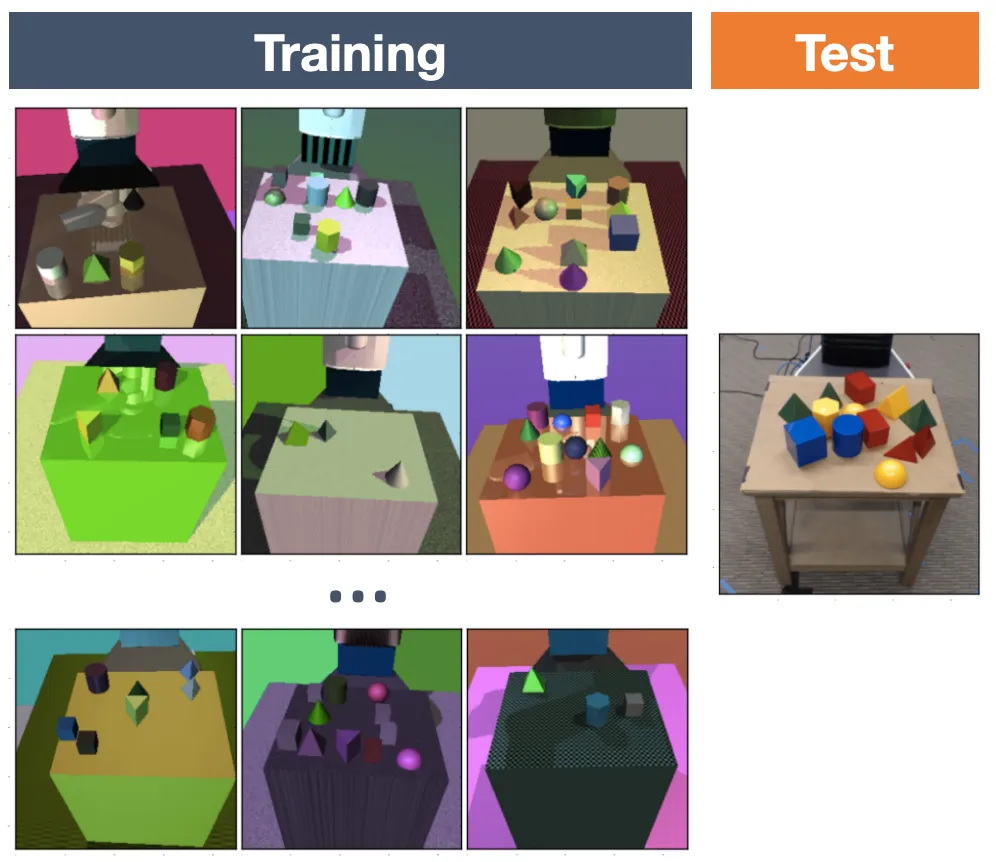

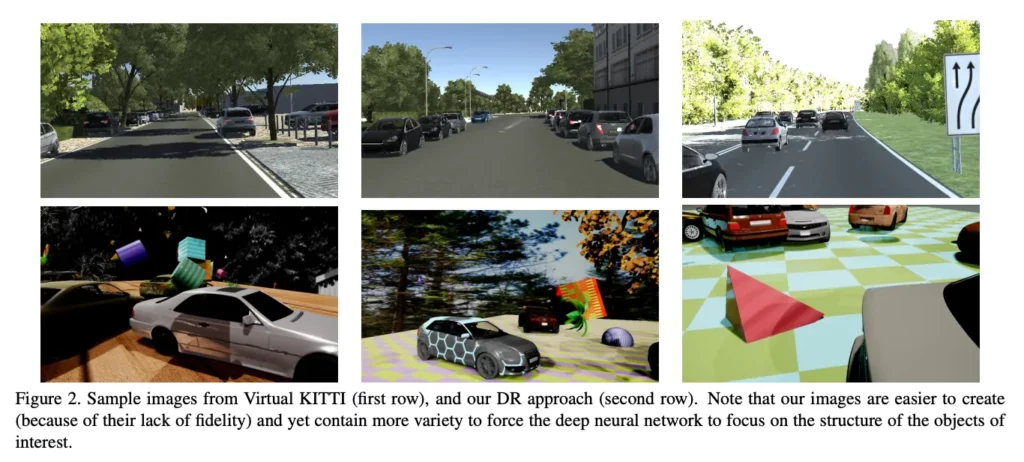

In 2017, a technique known as domain randomization was introduced. Rather than producing photorealistic synthetic images, extremely random, non-realistic scenes were produced for training. Incredibly, this improved performance, with researchers theorizing that the random nature of the scenes forced networks to focus on only the most intrinsic characteristics of objects it was trying to detect. The models trained on entirely synthetic data were transferred to real robots, which were able to locate physical objects on a table to within 1.5cm accuracies (pdf link). NVIDIA researchers took this technique even further in 2018, achieving near state-of-the-art performance on object detection in complex street scenes used to validate models for self-driving cars (pdf link).

Commercially, companies are beginning to catch on. AI.Reverie uses a customized rendering engine to create elaborate synthetic datasets, containing everything from construction sites to herds of wild animals. Unity has released an open source ImageSynthesis library that makes it trivial to output various types of annotation data. Laan Labs was able to use this tool to train a 3D pose estimation model for cars entirely on synthetic data.

How can you get started?

If you’re ready to get started, I recommend starting with a tool like Unity, Unreal Engine, or Blender. They’re cheap (or free), have vibrant developer communities where you can find help and assets, and are easily customizable through scripting.

In about two weeks, I was able to learn enough of Unity to generate thousands of images and annotations of Coke cans and train an object detection model that works shockingly well in the real world. I found this tutorial particularly helpful.

Limitations of synthetic data

While I’m bullish on the future of synthetic data for machine learning, there are a few limitations. Some of them are technical, while others are related to business:

- Simple, singular tasks like “detect this specific product” are easy, but more complex tasks like “detect hundreds of species of animals” are still difficult.

- We don’t have great tools to generate non-visual data such as text or speech.

- If you don’t have a good physical model, it’s hard to generate high quality data. How do we generate synthetic MRI data from a brain without a detailed digital model of a brain to begin with?

- From a business perspective, synthetic data turns many models into commodities in the long run. If data required to train a model can be generated for nearly any object, what does that do to the value of the resulting model?

- Companies selling synthetic data have an alignment problem with customers. Customers want the data to train the model, but if it’s the model that’s valuable, these companies should keep the data they generate and license the model instead. Will customers accept this?

Conclusion

Synthetic data is going to make machine learning accessible to a huge number of people and problems. Computer vision tasks will be the lowest hanging fruit thanks to advanced rendering software that can produce photorealistic images and accompanying annotations. Generating enough data to train a model will soon be as easy as finding an appropriate 3D render of an object in an asset store.

Comments 0 Responses