Machine learning can help us choose the best route home, find a product that matches our needs, or even schedule hair salon appointments. Taking an optimistic view, by applying machine learning in our projects we can make our lives better and even move society forward.

Mobile phones are already a huge part of our lives, and combining them with the power of machine learning is something that, in theory, can create user experiences that delight and impress users. But do we really need to add machine learning to our apps? And if so, what tools and platforms are currently at our disposal? That’s what we’ll talk about in this article.

To use machine learning, you need a trained model. Let’s see how you can get one into your app. You have two options:

1 — Create (train) a new model

2 — Use a pre-trained model

The first case is more problem-specific. For example, let’s say you have an app where users start discussions, and you need to automatically classify each discussion into a general topic. This task depends on your app’s own data, so you’ll probably need to build a model for it; a model trained with data from another app would likely not produce good results for you.

Building a model requires data, so before thinking about how to deploy a model, the first step should be deciding how to collect that data.
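To make the custom-model case concrete, here is a minimal sketch of a topic classifier trained on an app’s own discussion data. It assumes a multinomial Naive Bayes model (just one simple choice among many), and the discussion titles and topic labels are hypothetical placeholders for the data you would collect from your own users.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace (a deliberately naive tokenizer)."""
    return text.lower().split()


class NaiveBayesTopicClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesTopicClassifier":
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # topic -> word -> count
        self.vocab = set()
        for text, label in zip(texts, labels):
            words = tokenize(text)
            self.word_counts[label].update(words)
            self.vocab.update(words)
        self.total_docs = len(labels)
        return self

    def predict(self, text: str) -> str:
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            # log P(topic) plus the smoothed log P(word | topic) of every word
            score = math.log(doc_count / self.total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical training data: discussion titles labeled with a general topic.
titles = [
    "best pasta recipe for dinner",
    "how to bake sourdough bread",
    "python error when reading a file",
    "which laptop is best for programming",
]
topics = ["cooking", "cooking", "tech", "tech"]

model = NaiveBayesTopicClassifier().fit(titles, topics)
print(model.predict("easy bread recipe"))         # cooking
print(model.predict("programming laptop error"))  # tech
```

With real data you would need far more examples per topic; the point is that the model only knows the vocabulary and topics that appear in *your* app’s discussions.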

But now imagine you need to add text-to-speech functionality to your app. Training a model for that requires a lot more work (and data), so it makes sense to use a pre-trained deep learning model instead. For tasks that humans are good at (speech, vision, etc.), deep learning models are usually the way to go.

These two approaches are the main ways of applying machine learning in general, so they are also our options for applying machine learning inside our mobile apps. With that in mind, the Introduction to TensorFlow Mobile documentation raises an important question:

Step 1: Is your problem solvable by mobile machine learning?

Think about the problem your app solves. Is there anything you can do with machine learning that will bring more value to a user? Is it feasible?

There are a lot of applications for predictive models. Whether your goal is to make a machine learning model the main asset of your application or you just want to use a model to improve a service, two important questions to keep in mind are “Will it add value to users?” and “Does the value justify the effort?”

Now that we’ve considered these key questions, let’s see what it takes to deploy a machine learning model in your mobile app.

Step 2: Assess your requirements to deploy the models

In terms of computational resources, making predictions (inference) is less costly than training. Even so, on-device inference means the model has to be loaded into RAM and run on the phone’s CPU or GPU, which can take significant computation time. There have been advances in working around these limitations, such as Apple’s on-device face detection network, which was trained with a “teacher-student” approach (An On-device Deep Neural Network for Face Detection).
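The “teacher-student” approach mentioned above is commonly known as knowledge distillation: a small student network is trained to match the softened output probabilities of a large teacher network, so that the compact student is cheap enough to run on the device. The sketch below shows only the core loss term in plain Python; the logits and the temperature of 4.0 are made-up values for illustration, and this is not Apple’s training code.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=4.0):
    """KL divergence from the softened teacher distribution to the student's.

    It is zero when the student reproduces the teacher's soft targets exactly
    and positive otherwise. During training, this term (usually combined with
    the ordinary loss on the true labels) is minimized to update the student.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the big model
    q = softmax(student_logits, temperature)  # predictions of the small model
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical logits for one input over three classes.
teacher = [6.0, 1.0, 2.5]
good_student = [5.5, 1.2, 2.4]
poor_student = [1.0, 3.0, 0.5]

print(distillation_loss(teacher, teacher))       # 0.0
print(distillation_loss(teacher, good_student))  # small
print(distillation_loss(teacher, poor_student))  # noticeably larger
```

The softened targets carry more information than a single hard label (for example, how similar the teacher thinks two classes are), which is what lets a much smaller student get close to the teacher’s accuracy.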

In general, then, training a model inside your app isn’t a good idea today. Inference is a different story: with today’s hardware, making predictions on the device without a cloud-based web service is possible (and in some cases easy), although cloud-based web services remain an option.

Larry O’Brien describes three reasons why you should use on-device inference vs. cloud-based services:

Cloud-based services are usually paid, so cost also needs to be taken into account.

Another thing to consider is whether you’ll use a custom model (one trained with your own data) or a pre-trained one. Using pre-trained models doesn’t necessarily require a data scientist, but building a custom model probably will.

Step 3: Deploying the models

Now let’s take a look at some options available today to deploy models to mobile apps.

ML Kit for Firebase

Features:

☑️ On-device inference

☑️ Cloud-based web service

☑️ Pre-trained models available

☑️ You can use your own models

⬜️ Development tools to help you work with the models

☑️ Paid (but with free and low-cost options)

Introduced at Google I/O 2018, ML Kit for Firebase offers five ready-to-use (“base”) APIs, and you can also deploy your own TensorFlow Lite models if you don’t find a base API that covers your use case.

ML Kit offers both on-device and cloud APIs: the on-device APIs keep working when there’s no network connection, while the cloud-based APIs run on Google Cloud Platform.
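Having both kinds of API makes a simple fallback strategy possible: use the cloud model when the device is online (for example, because it may be larger or more accurate) and fall back to the on-device model otherwise. The sketch below is plain Python with hypothetical `cloud_predict` and `on_device_predict` callables and a hypothetical `OfflineError`, standing in for whatever SDK calls your app actually makes; it is not ML Kit’s API.

```python
from typing import Callable


class OfflineError(Exception):
    """Raised by the (hypothetical) cloud client when there is no network connection."""


def predict_with_fallback(
    image: bytes,
    cloud_predict: Callable[[bytes], str],
    on_device_predict: Callable[[bytes], str],
) -> tuple[str, str]:
    """Try the cloud model first; fall back to the on-device model when offline.

    Returns the predicted label together with the backend that produced it.
    """
    try:
        return cloud_predict(image), "cloud"
    except OfflineError:
        return on_device_predict(image), "on-device"


# Hypothetical stand-ins for real SDK calls.
def fake_cloud(image: bytes) -> str:
    raise OfflineError("no network")


def fake_on_device(image: bytes) -> str:
    return "dog"


print(predict_with_fallback(b"...", fake_cloud, fake_on_device))  # ('dog', 'on-device')
```

Returning the backend name alongside the label is a small design choice that makes it easy to log how often users actually end up offline.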

With ML Kit you can upload the models through the Firebase console and let the service take care of hosting and serving them to the app’s users. Another benefit is that, because ML Kit is part of Firebase, you can use it alongside the rest of the Firebase platform.

The ML Kit team also decided to invest in model compression:

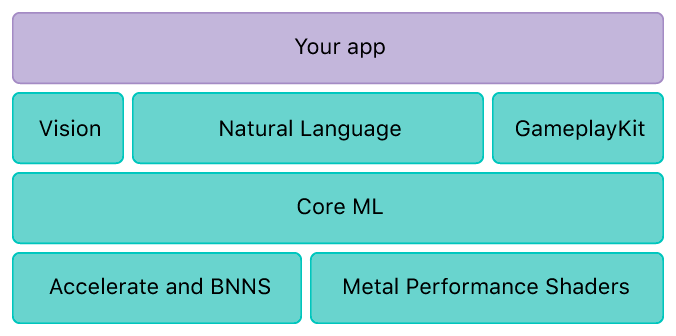
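One common compression technique is post-training quantization: storing 32-bit float weights as 8-bit integers plus a scale and an offset, which cuts the storage for those weights by roughly a factor of four. The sketch below applies simple min/max (affine) quantization in plain Python to made-up weights; it illustrates the general idea and is not necessarily the method ML Kit uses.

```python
from array import array


def quantize(weights: list[float]) -> tuple[array, float, float]:
    """Map floats to unsigned 8-bit codes using min/max (affine) quantization."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255 or 1.0  # avoid division by zero when all weights are equal
    codes = array("B", (round((w - lo) / scale) for w in weights))
    return codes, scale, lo


def dequantize(codes: array, scale: float, lo: float) -> list[float]:
    """Recover approximate float weights from the 8-bit codes."""
    return [lo + c * scale for c in codes]


weights = [-0.82, -0.31, 0.0, 0.07, 0.45, 0.91]  # hypothetical layer weights
codes, scale, lo = quantize(weights)
restored = dequantize(codes, scale, lo)

float_bytes = len(weights) * array("f").itemsize  # 4 bytes per float32
int8_bytes = len(codes) * codes.itemsize          # 1 byte per code
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(float_bytes, int8_bytes)  # 24 6
```

Each restored weight is off by at most about half a quantization step (`scale / 2`), which is the accuracy being traded for the smaller model.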

Core ML (Apple)

Features:

☑️ On-device inference

⬜️ Cloud-based web service (but you can use the IBM Watson Services for Core ML)

☑️ Pre-trained models available

☑️ You can use your own models

☑️ Development tools to help you work with the models

⬜️ Paid

With Core ML, you can build your own model or use a pre-trained model.

Building your own model

To use your own model, you first train it with a third-party framework and then convert it to the Core ML model format.

At the time of writing, the supported frameworks are scikit-learn 0.18, Keras 1.2.2+, Caffe v1, and XGBoost 0.6. It’s also possible to “create your own conversion tool when you need to convert a model that isn’t in a format supported by the tools.”
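Conceptually, a converter reads a model trained in one framework and writes the same structure and parameters into the format another runtime understands, and the converted model should then reproduce the original’s outputs. As a toy illustration only, the sketch below assumes a hypothetical JSON spec (not the actual Core ML format) and a hypothetical logistic regression whose weights are already trained.

```python
import json
import math

# Training side: a hypothetical, already-trained logistic regression.
trained = {"weights": [0.8, -1.2, 0.3], "bias": 0.1}


def original_predict(x: list[float]) -> float:
    z = sum(w * xi for w, xi in zip(trained["weights"], x)) + trained["bias"]
    return 1 / (1 + math.exp(-z))


def convert(model: dict) -> str:
    """Serialize the model into a hypothetical runtime format (a JSON spec)."""
    spec = {
        "format_version": 1,
        "type": "logistic_regression",
        "parameters": {"coefficients": model["weights"], "intercept": model["bias"]},
    }
    return json.dumps(spec)


# Runtime side: only knows how to read the spec, not the training code.
class Runtime:
    def __init__(self, spec_json: str):
        spec = json.loads(spec_json)
        if spec["type"] != "logistic_regression":
            raise ValueError(f"unsupported model type: {spec['type']}")
        self.coef = spec["parameters"]["coefficients"]
        self.intercept = spec["parameters"]["intercept"]

    def predict(self, x: list[float]) -> float:
        z = sum(c * xi for c, xi in zip(self.coef, x)) + self.intercept
        return 1 / (1 + math.exp(-z))


runtime = Runtime(convert(trained))
sample = [1.0, 0.5, 2.0]
# A correctly converted model reproduces the original model's outputs.
print(math.isclose(runtime.predict(sample), original_predict(sample)))  # True
```

Whatever converter you use, comparing the converted model’s predictions with the original’s on a few sample inputs is a cheap sanity check before shipping.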

Using pre-trained models

You can also just download and use some pre-trained models. Options include:

There are also tools built on top of Core ML to help you work with the models:

- Vision for image analysis

- Natural Language for natural language processing

- GameplayKit for evaluating learned decision trees
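Evaluating a learned decision tree, which GameplayKit supports, comes down to walking from the root to a leaf and answering one question about the input at each node. The plain-Python sketch below uses a hypothetical tree for a game character’s next action; it illustrates the idea and does not use GameplayKit’s API.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    action: str


@dataclass
class Node:
    attribute: str    # which input feature this node tests
    threshold: float  # go left if feature < threshold, else right
    left: "Tree"
    right: "Tree"


Tree = Union[Leaf, Node]


def evaluate(tree: Tree, features: dict[str, float]) -> str:
    """Follow the branch that matches the input until a leaf is reached."""
    while isinstance(tree, Node):
        tree = tree.left if features[tree.attribute] < tree.threshold else tree.right
    return tree.action


# Hypothetical tree, as it might come out of training on recorded gameplay.
tree = Node(
    "health", 30,
    left=Leaf("retreat"),
    right=Node("enemy_distance", 5, left=Leaf("attack"), right=Leaf("patrol")),
)

print(evaluate(tree, {"health": 20, "enemy_distance": 2}))  # retreat
print(evaluate(tree, {"health": 80, "enemy_distance": 2}))  # attack
print(evaluate(tree, {"health": 80, "enemy_distance": 9}))  # patrol
```

Because evaluation is just a handful of comparisons, decision trees are among the cheapest models to run on a device.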

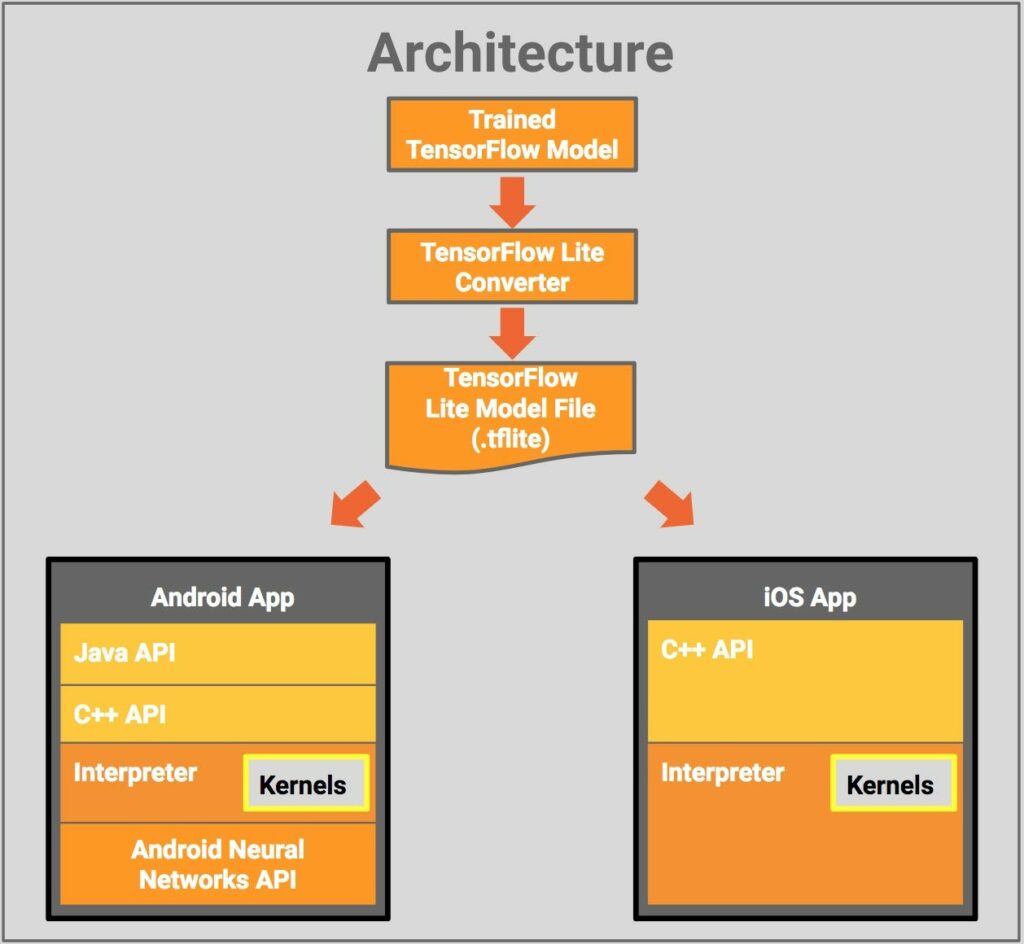

TensorFlow Mobile (Google)

Features:

☑️ On-device inference

⬜️ Cloud-based web service

⬜️ Pre-trained models available

☑️ You can use your own models

⬜️ Development tools to help you work with the models

⬜️ Paid

To use TensorFlow Mobile, you need a TensorFlow model that already works in a desktop environment. There are actually two options: TensorFlow Mobile and TensorFlow Lite. TensorFlow Lite is the evolution of TensorFlow Mobile, offering a smaller binary size, fewer dependencies, and better performance.

It works with both Android and iOS devices.

Cloud-based web services

Features:

⬜️ On-device inference

☑️ Cloud-based web service

☑️ Pre-trained models available

⬜️ You can use your own models

⬜️ Development tools to help you work with the models

☑️ Paid

Cloud-based options include Clarifai (vision AI solutions), Google Cloud’s AI (machine learning services with pre-trained models and a service to generate your own tailored models), IBM Cloud solutions, and Amazon Machine Learning solutions.

These options seem to be the best choice for companies and startups that need to outsource their machine learning services because they don’t have in-house expertise (or don’t want to hire people for that).

Conclusion

Before falling for the machine learning hype, it’s important to think about the service your app provides, examine whether machine learning can improve the quality of the service, and decide if this improvement justifies the effort of deploying a machine learning model.

To deploy models, ML Kit for Firebase looks promising and seems like a good choice for startups or developers just starting out. Core ML and TensorFlow Mobile are good options if you have mobile developers on your team or if you’re willing to get your hands a little dirty.

Meanwhile, cloud-based web services are the best option if you just want a simple API endpoint and don’t want to spend much time deploying a model. To give an idea of costs, Amazon ML charges $0.10 per 1,000 batch predictions, while on-device inference has no per-prediction fee.
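As a rough worked example using the $0.10 per 1,000 predictions figure above, and assuming cost scales linearly with volume (ignoring any rounding or extra fees on a real bill), the monthly cost grows directly with your user base. The user counts below are hypothetical.

```python
from decimal import Decimal

PRICE_PER_1000 = Decimal("0.10")  # USD, the batch-prediction price quoted above


def monthly_cost(users: int, predictions_per_user_per_day: int, days: int = 30) -> Decimal:
    """Estimate the monthly bill, assuming cost is linear in the number of predictions."""
    predictions = users * predictions_per_user_per_day * days
    return (PRICE_PER_1000 * predictions / 1000).quantize(Decimal("0.01"))


# Hypothetical usage: 10,000 daily users making 20 predictions each per day.
print(monthly_cost(10_000, 20))  # 600.00
```

`Decimal` is used instead of `float` so that currency amounts are exact.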

Have you used any of these services (or frameworks)? How was your experience?

Thanks for reading!
