The definition of an edge device varies greatly from application to application, and it includes devices ranging from smartwatches to self-driving cars and everything in between. Currently, the most numerous network-connected edge device is likely the smartphone.

There is also a growing number of devices built around small microcontrollers (MCUs) that aren’t connected to any network, which can be used for applications like an intelligent sprinkler system for a home garden.

To put numbers on this, there are billions of smartphones in use worldwide, and the number of connected intelligent devices keeps growing, from intelligent thermostats to doorbells to delivery robots. Most of these devices run on battery (with exceptions such as smart assistants) and are connected via Wi-Fi, Bluetooth, and/or mobile networks.

When it comes to machine learning on these devices, a common approach is to send sensor data from the device to the cloud for inference, which:

- Consumes more power (moving data over a wired or wireless network consumes energy).

- Increases latency, since every prediction requires a round trip over the network.

- Costs more due to data transmission and cloud computing costs.

- Requires extra work to deal with data privacy and security issues.
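To put the first point in perspective, here is a back-of-the-envelope sketch of how much raw data a single sensor stream produces if every sample is shipped to the cloud. The sample rates, channel counts, and sample widths are illustrative assumptions, not figures for any particular device.

```python
def raw_data_rate_bytes_per_s(sample_rate_hz: int, channels: int, bytes_per_sample: int) -> int:
    """Bytes per second produced by an uncompressed sensor stream."""
    return sample_rate_hz * channels * bytes_per_sample


# Assumed example streams (illustrative values only).
microphone = raw_data_rate_bytes_per_s(16_000, 1, 2)  # 16 kHz, mono, 16-bit audio
imu = raw_data_rate_bytes_per_s(100, 6, 2)            # 100 Hz, 3-axis accel + 3-axis gyro, 16-bit

# Total volume per day if every sample were streamed to the cloud.
SECONDS_PER_DAY = 24 * 60 * 60
print(f"microphone: {microphone} B/s, {microphone * SECONDS_PER_DAY / 1e9:.2f} GB/day")
print(f"IMU:        {imu} B/s, {imu * SECONDS_PER_DAY / 1e6:.1f} MB/day")
```

By contrast, a device that runs, say, keyword spotting locally only needs to transmit a few bytes when an event is detected.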

Running models on the device avoids these costs, which is why embedding machine learning models on-device is so valuable; keeping raw data on the device also improves privacy and security.

In terms of compute, most edge ML applications perform only inference on the device; however, with emerging techniques like federated learning, that may change in the future.
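In federated learning, training happens on the devices and only model updates are sent to a server, so raw data never leaves the device. A minimal sketch of the server-side aggregation step of federated averaging (FedAvg), assuming each model is flattened to a list of floats and each client reports how many local examples it trained on; the client values below are made up for illustration.

```python
from typing import List, Tuple


def federated_average(client_updates: List[Tuple[List[float], int]]) -> List[float]:
    """Average client weights, weighting each client by its number of local examples.

    Each entry is (weights, num_local_examples). Only the weights are sent to the
    server; the training data stays on the device.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Hypothetical updates from three devices: (weights, number of local examples).
updates = [([0.10, 0.50], 100), ([0.30, 0.10], 300), ([0.20, 0.20], 100)]
print(federated_average(updates))
```

The device with 300 examples contributes three times as much to the global model as each of the others.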

But for now, it has become possible to run machine learning models on small microcontrollers and other edge devices, which will lead to a wide variety of interesting applications. Read on to learn how to get started with developing machine learning applications for the edge!

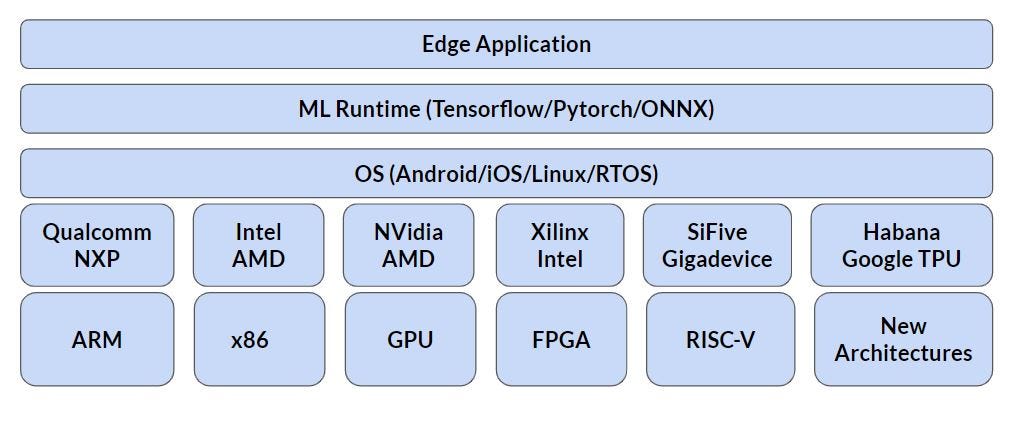

Edge Architecture

As there are different types of edge products, their architectures also differ. The architectures in use today can be grouped into 5–6 categories, as shown below:

ARM Cortex-A processors power almost all smartphones available today, whereas Cortex-M microcontrollers are used in keyboards, smartwatches, security cameras, and many other products around us.

x86-based processors power the laptops and netbooks used by millions of people, as well as ATMs and various other products. Some smartphones also contain custom machine learning accelerators developed by companies like Qualcomm and Huawei.

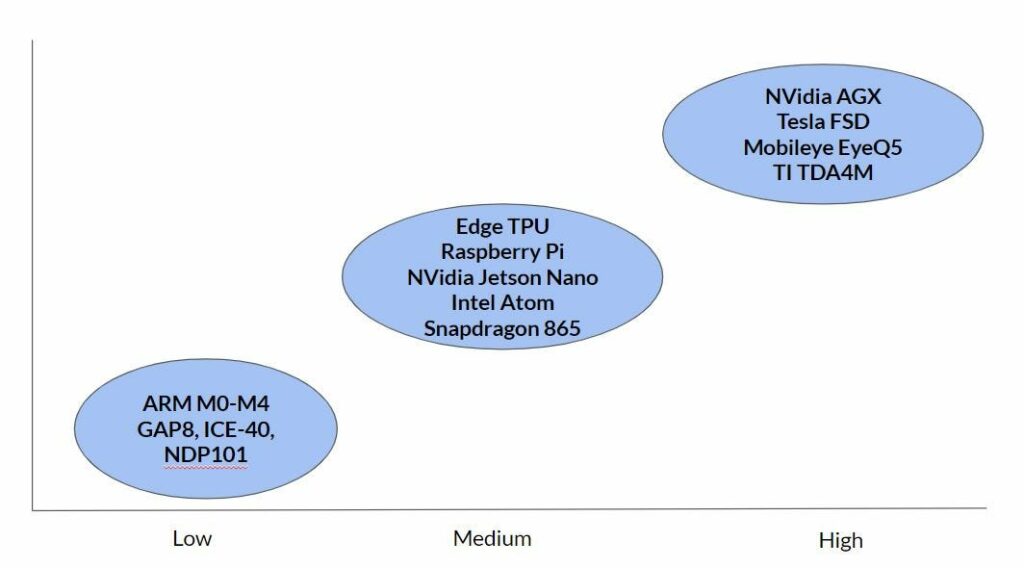

In terms of power, these products can be grouped into three categories, ranging from ultra-low-power Cortex-M MCUs to high-performance systems built specifically for autonomous cars by NVIDIA, Mobileye, and Texas Instruments.

ARM has recently launched its Ethos family of NPUs (Neural Processing Units); the Ethos-U55 is designed to be paired with Cortex-M processors and can be used in a wide variety of low-power applications. Syntiant’s NDP101 is a low-power chip for keyword classification in speech applications.


Model Zoos and Models

As the amount of compute and memory is limited on edge devices, the key properties of edge machine learning models are:

- Small model size — Can be achieved through quantization, pruning, etc.

- Less computation — Can be achieved using fewer layers and cheaper operations such as depthwise separable convolutions.

- Inference only — In most cases, only inference is performed on-device.
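To see why depthwise separable convolutions reduce computation, the sketch below counts multiply-accumulate operations (MACs) for one layer, assuming stride 1, "same" padding, and no bias. A depthwise separable convolution replaces one k x k convolution over all channels with a per-channel k x k depthwise convolution followed by a 1x1 pointwise convolution. The layer dimensions are an arbitrary illustrative choice.

```python
def standard_conv_macs(h: int, w: int, k: int, c_in: int, c_out: int) -> int:
    """MACs for a k x k standard convolution producing an h x w x c_out output."""
    return h * w * k * k * c_in * c_out


def depthwise_separable_macs(h: int, w: int, k: int, c_in: int, c_out: int) -> int:
    """MACs for a k x k depthwise conv (one filter per input channel) plus a 1x1 pointwise conv."""
    depthwise = h * w * k * k * c_in
    pointwise = h * w * c_in * c_out
    return depthwise + pointwise


# Illustrative layer: 56x56 feature map, 3x3 kernel, 64 input and 128 output channels.
std = standard_conv_macs(56, 56, 3, 64, 128)
sep = depthwise_separable_macs(56, 56, 3, 64, 128)
print(f"standard: {std:,} MACs, separable: {sep:,} MACs, reduction: {std / sep:.1f}x")
```

The reduction factor works out to 1 / (1/c_out + 1/k²), so for 3x3 kernels it approaches 9x as the number of output channels grows.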

A model’s optimization process differs from application to application. In some applications, such as autonomous vehicles, which require the highest level of accuracy, model compression techniques like quantization might not be applied at all.
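As an illustration of the quantization mentioned above, here is a minimal sketch of per-tensor affine (asymmetric) int8 quantization, the general scheme of q = round(x / scale) + zero_point used for 8-bit models. It is a simplified illustration, not the code of TensorFlow Lite or any other framework, and the weight values are made up.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to int8 using q = round(x / scale) + zero_point, clamped to [-128, 127]."""
    lo = min(min(values), 0.0)  # include 0.0 in the range so it is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = int(round(-128 - lo / scale))
    q = [max(-128, min(127, int(round(x / scale)) + zero_point)) for x in values]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float values from the int8 codes."""
    return [(v - zero_point) * scale for v in q]


weights = [-0.42, 0.0, 0.13, 0.87, -1.05, 0.5]  # hypothetical float32 weights
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.5f}", f"zero_point={zp}", f"max_err={max_err:.4f}")
```

Each weight now takes 1 byte instead of 4 (plus one shared scale and zero point per tensor), at the cost of a rounding error of roughly half a quantization step, which is why accuracy-critical applications may skip this step.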

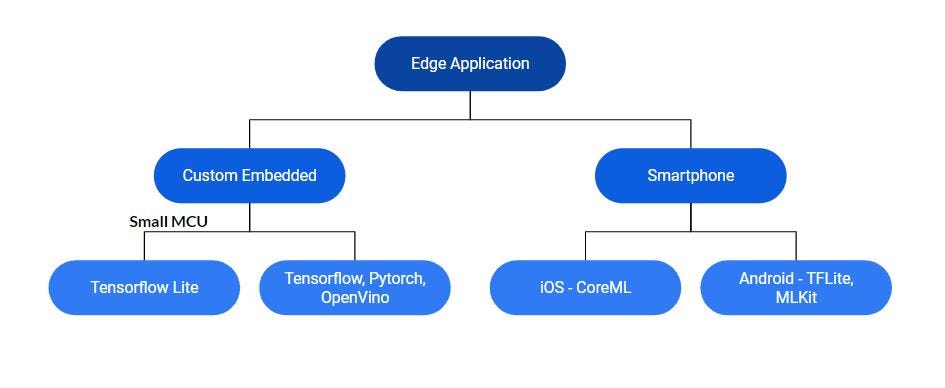

Pre-trained models optimized for specific platforms can provide significant boosts in inference speed, and they can also be used for transfer learning to train application-specific models. They also provide a good baseline against which to compare custom models, and they can help development teams get to an MVP faster. TensorFlow and PyTorch, the two most popular machine learning frameworks, can run on many Linux-capable boards like the Raspberry Pi and NVIDIA Jetson. Below are some of the model zoos and libraries specific to edge models.

- Intel OpenVINO Model Zoo — Intel provides a large number of pre-trained models optimized for Intel x86 CPUs. Free models are available for use cases like face detection, pedestrian detection, and human pose estimation. The OpenVINO toolkit can be used to optimize custom models for Intel products.

- iOS Core ML — Distilled versions of state-of-the-art models like GPT-2 and BERT are available for use on iOS devices.

- TensorFlow Lite — Part of the TensorFlow ecosystem, it can be used to deploy ML models on Android, iOS, and various other edge devices. Pre-trained models are available on Android for object detection, pose estimation, question answering using MobileBERT, and more. ML Kit, part of the Firebase ecosystem, is another option that also works on iOS. ML Kit has a vision API that can label images with 400 common object categories, and it can also use AutoML Vision Edge to train custom models.

- PyTorch Mobile — Part of the PyTorch ecosystem, it supports Android, iOS, and other edge devices. PySyft is a library for privacy-preserving ML that works with PyTorch and can be used on some edge devices.

- PocketFlow is an open source project from Tencent for automatic compression and acceleration of deep learning models.

- ARM CMSIS-NN — This library provides efficient implementations of common neural network operations, like convolution, for ARM Cortex-M microcontrollers. In the CMSIS-NN paper, ARM describes various optimizations used to run a CNN on a Cortex-M7 MCU, and several examples are available.

- Coral Edge TPU — Pre-trained models for the Edge TPU are available for image classification, object detection, image segmentation, and more.
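Libraries like CMSIS-NN run on fixed-point data (for example q7, i.e. signed 8-bit values with an implied binary point) because many Cortex-M parts handle integer math far more efficiently than floating point. The Python sketch below only imitates the kind of arithmetic such kernels perform in a dot product: 8-bit products accumulated in a wide accumulator, rounded, right-shifted back to the output format, and saturated to 8 bits. It is not the CMSIS-NN API; the function names, shift amount, and inputs are hypothetical.

```python
from typing import List


def saturate_q7(x: int) -> int:
    """Clamp an integer to the signed 8-bit range [-128, 127]."""
    return max(-128, min(127, x))


def q7_dot(inputs: List[int], weights: List[int], bias: int, out_shift: int) -> int:
    """Fixed-point dot product in the style of Cortex-M NN kernels (illustrative only).

    Products of 8-bit values are summed in a wide accumulator (32-bit on an MCU),
    rounded to nearest, shifted right into the output's fixed-point format, and
    saturated back to 8 bits.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    acc = bias
    for x, w in zip(inputs, weights):
        acc += x * w  # each product fits in 16 bits; the running sum may not
    if out_shift > 0:
        acc += 1 << (out_shift - 1)  # add half a step so the shift rounds to nearest
    return saturate_q7(acc >> out_shift)  # arithmetic shift, like C on typical MCUs


print(q7_dot([10, -20, 30, 40], [5, 6, -7, 8], bias=0, out_shift=2))
print(q7_dot([127] * 4, [127] * 4, bias=0, out_shift=0))  # overflows 8 bits, saturates
```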

Cloud Platforms

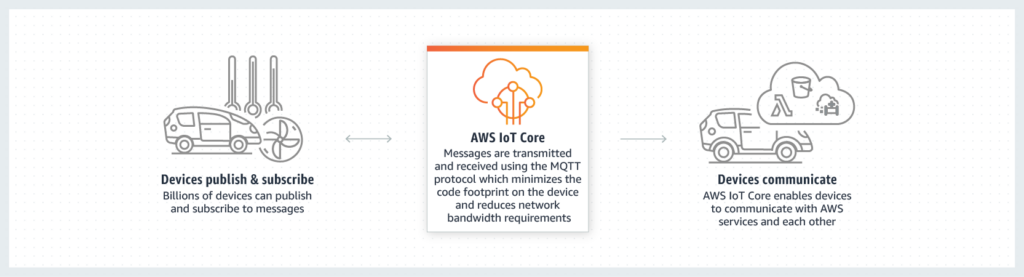

- AWS IoT—Includes Amazon FreeRTOS, AWS IoT Core, and various other services to manage and develop edge applications.

- Azure IoT — Includes services like Azure Sphere, which helps with security and deployment. They have worked with MediaTek to develop the MT3620 chip with built-in security, and development kits based on that chip are certified for Azure’s IoT platform.

Hardware

Sensors

In edge applications, sensors are key because they collect and measure different types of information about the environment, such as temperature, sound, images, motion, and humidity. Below are just a few interesting sensors:

- ST LSM6DSOX Inertial Sensor — An inertial sensor with a 3-axis accelerometer and a 3-axis gyroscope. It also has a machine learning core with 8 independent decision trees that can be used to classify motion patterns.

- Sensor Tag, Sensor Tile, and Sensor Puck are development kits with BLE (Bluetooth low energy) and multiple environment sensors like humidity, temperature, and ambient light.
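To illustrate the idea behind a decision-tree machine learning core like the one in the LSM6DSOX, here is a Python sketch of a tiny tree that classifies a window of accelerometer samples using two simple features. The features, thresholds, and class names are hypothetical; on the real sensor, the trees are configured ahead of time and evaluated in the sensor’s hardware rather than written in Python.

```python
import math
from statistics import mean, pvariance
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (x, y, z) acceleration in g


def features(window: List[Sample]) -> Tuple[float, float]:
    """Mean and variance of the acceleration magnitude over one window."""
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in window]
    return mean(mags), pvariance(mags)


def classify(window: List[Sample]) -> str:
    """Hand-written decision tree; thresholds are illustrative, not tuned on real data."""
    avg, var = features(window)
    if var < 0.01:
        # Almost no change in magnitude: either resting (about 1 g) or in free fall (about 0 g).
        return "free_fall" if avg < 0.3 else "stationary"
    if var < 0.5:
        return "walking"
    return "running"


still = [(0.0, 0.0, 1.0)] * 20
shaking = [(0.0, 0.0, 2.5 if i % 2 else 0.5) for i in range(20)]
print(classify(still), classify(shaking))
```

Trees like this are attractive on tiny hardware because each prediction is just a handful of comparisons on precomputed features.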

Edge Development Kits

Many companies are working on deep learning accelerators for training and inference of deep neural networks; however, very few of these products are available to developers today. Below is a list of development boards you can buy now for developing edge AI applications:

Low Power Boards

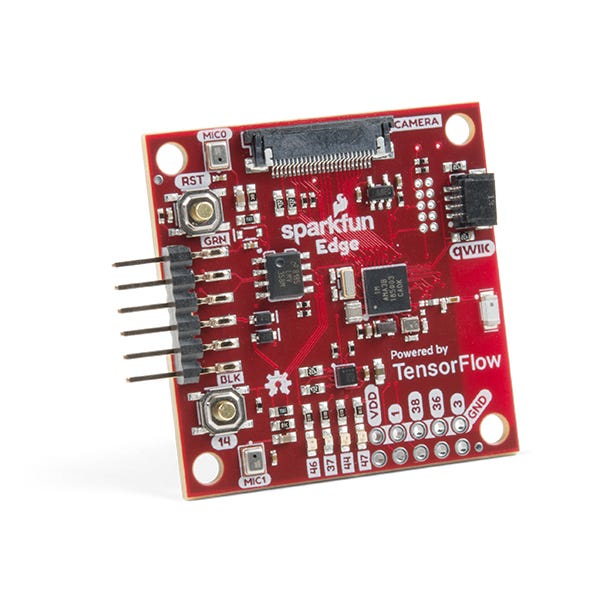

- SparkFun Edge board with Ambiq Apollo3 MCU — The Apollo3 from Ambiq Micro is one of the most power-efficient 32-bit Cortex-M4F microcontrollers. Examples are available for wake word detection and for building a magic wand! Cost — $14.95

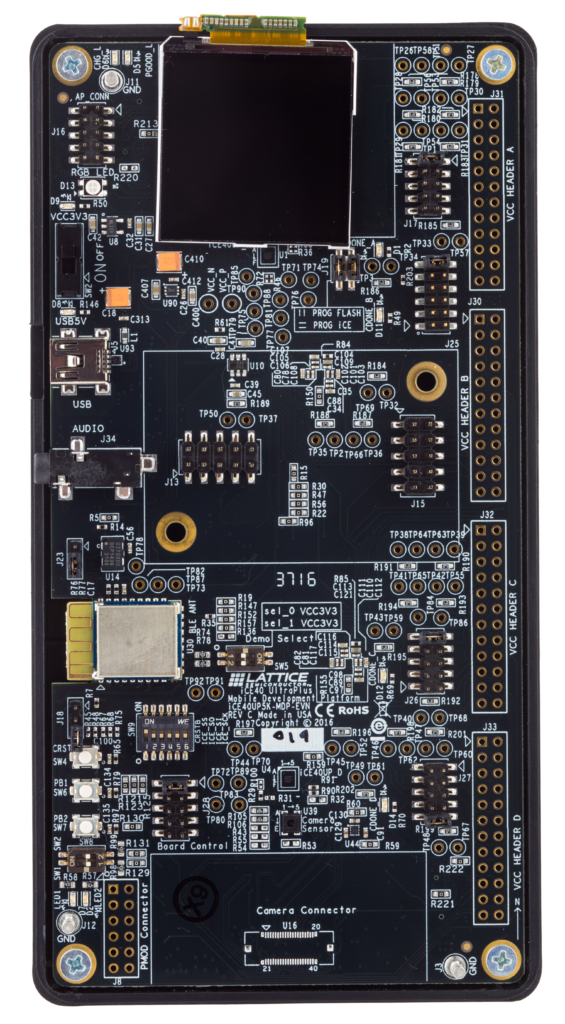

- Lattice iCE40 UltraPlus FPGA Platform — This FPGA-based development kit from Lattice can run small CNN models (4–8 layers) for applications like keyword detection and face detection. A version of the kit with various sensors (microphone, inertial sensors, etc.) is also available. Cost — $99

Medium Power Boards

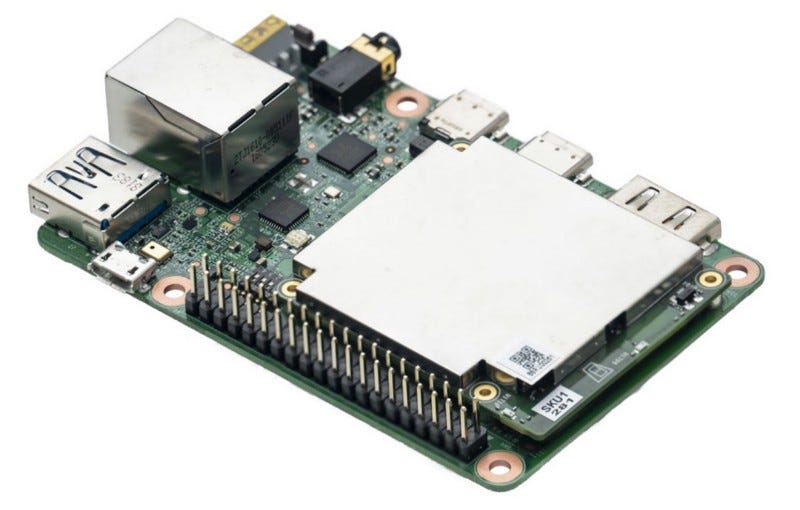

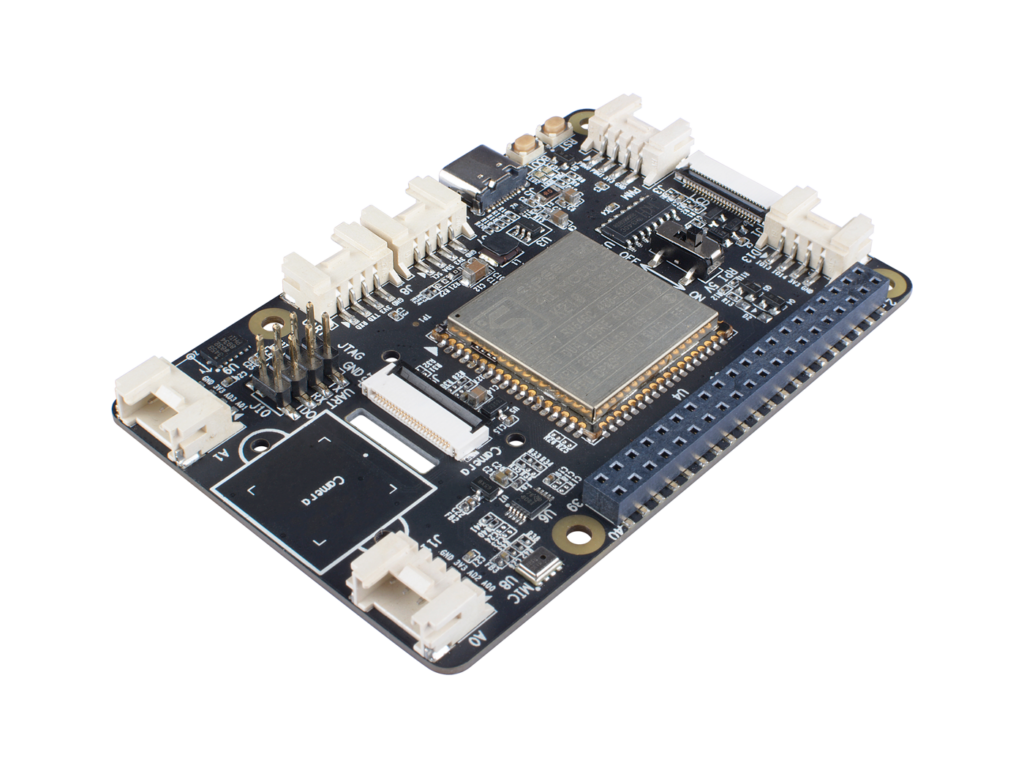

- Google Edge TPU — Google announced the Edge TPU a while back, and it is now available to purchase as part of the Coral platform. The dev board (pictured above) also contains an NXP SoC with Cortex-A53 cores, so it can be used standalone, and it has connectors for a camera and other peripherals. It can be programmed using TensorFlow Lite, and Google has published performance numbers for it. Cost — $149.99

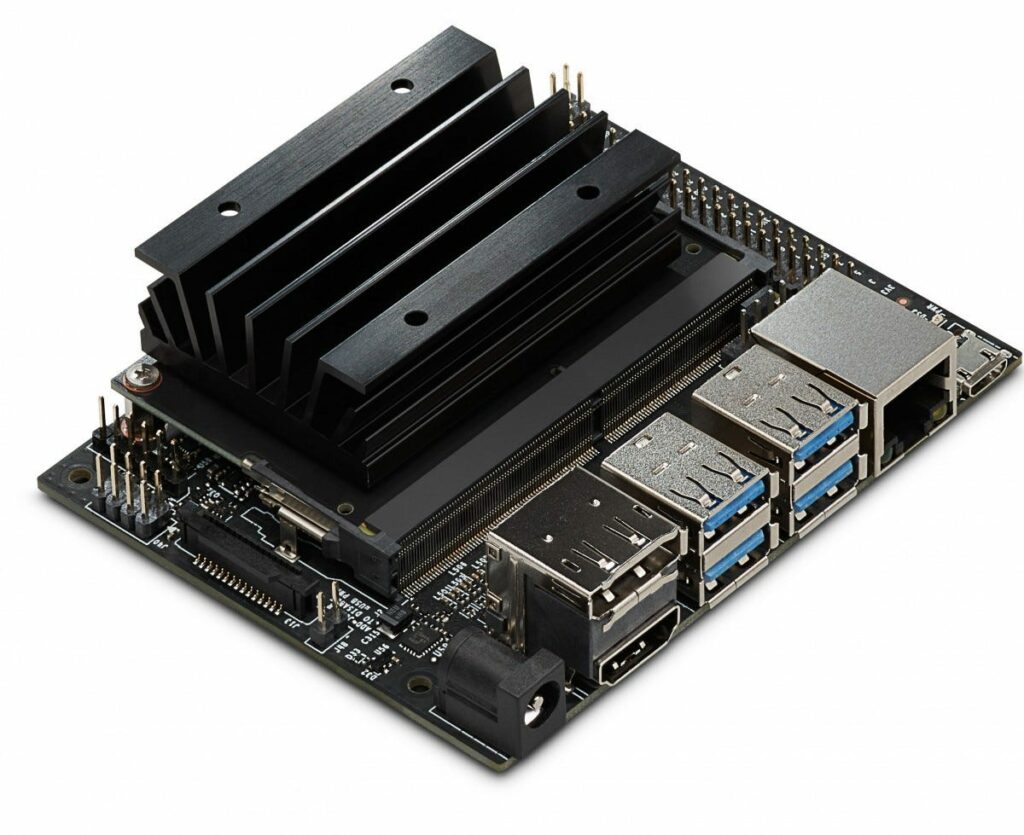

- NVIDIA Jetson Nano — This is the latest deep learning development board for edge AI, released by NVIDIA at GTC 2019. One of the key advantages of the Jetson Nano is that it includes a quad-core ARM CPU that can run a Linux OS, whereas the Intel NCS and Edge TPU USB sticks require a separate host board, such as a Raspberry Pi. It can be programmed using TensorFlow and PyTorch. Cost — $99.


- Intel Neural Compute Stick 2 — This USB form factor development board is powered by an Intel Myriad X Vision Processing Unit (VPU). Before Myriad X, Intel/Movidius launched Myriad 2, which powered the first generation of the Intel Neural Compute Stick. It is programmed using the Intel OpenVINO toolkit. Cost — $99

- Coral USB Accelerator — This packages the Google Edge TPU in a USB form factor similar to the NCS 2, and it can be used with a Raspberry Pi or a laptop. Cost — $74.99

- Sipeed MAix M1 (RISC-V + Kendryte K210) — This is a Grove HAT for the Raspberry Pi, currently priced at $24.50 ($28.90 after pre-order). The HAT is built around the Kendryte K210, which has a dual-core 600 MHz RISC-V CPU and a 16-bit neural network processor. Cost — $24.50

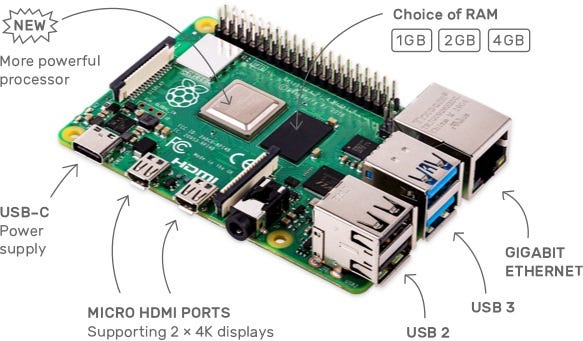

- Raspberry Pi: The RPi, which uses a quad-core ARM Cortex-A series processor, can be combined with the Edge TPU USB stick to make a powerful, easy-to-use edge AI platform. Cost — $35

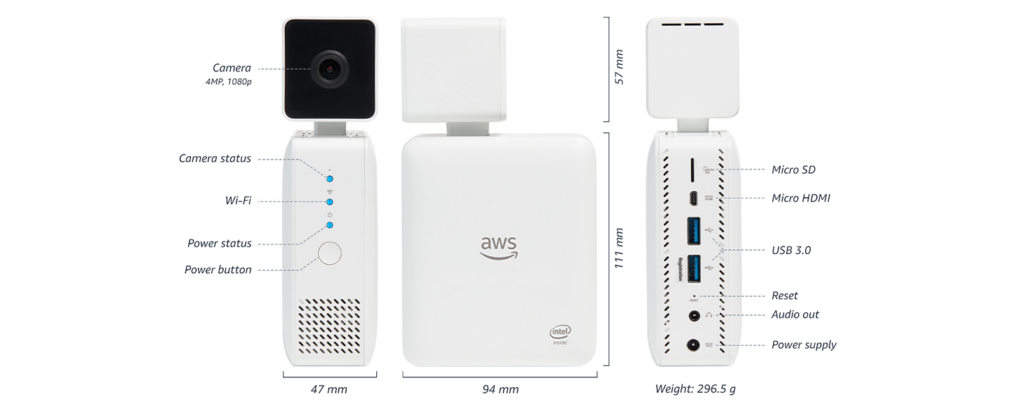

- AWS DeepLens — This kit from AWS is aimed at developers working on computer vision applications. It runs inference on an Intel Atom processor and integrates easily with AWS IoT services to send inference results to the cloud. Cost — $199

Conclusion

Developing AI applications at the edge requires working with constrained resources (memory, compute, etc.) while optimizing for cost, power, and area, which makes it one of the most challenging problems in AI today.

With the rise of connected devices and the evolution of software for developing applications on embedded devices, we’ll see many more edge applications using machine learning to solve new problems in areas such as healthcare, energy savings, and robotics, to name a few.

If you liked this post, feel free to share and 👏. Also, to learn more about edge AI and IoT, you can follow me on Twitter: https://twitter.com/MSuryavansh
