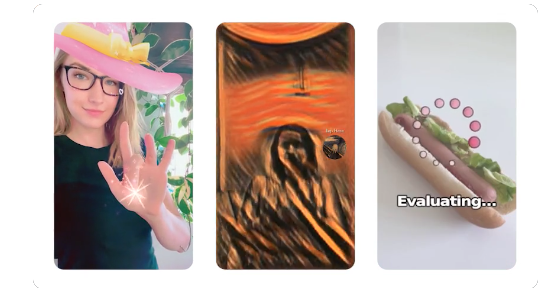

For years now, Snapchat has been at the forefront of mobile machine learning. Its popular Lenses, which often combine on-device ML models with augmented reality, have become shining examples of the power and flexibility of running ML directly on the device.

Given our respect and admiration for Snap’s work in this area, our team was thrilled to hear about the recent release of SnapML, Snap’s new ML framework, which ships as part of Snap’s Lens development platform, Lens Studio, starting with version 3.0.

How SnapML Works

Engineers, dev teams, and other creators can bring their own custom ML models into Lens Studio and ship them to Snapchat as Lenses. Generally speaking, these models must be compatible with the ONNX model format. Some standard data pre- and post-processing steps can be configured in Lens Studio; alternatively, these steps can be baked into the model architecture itself.
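One common way to bake pre-processing into the model, rather than configuring it in Lens Studio, is to fold a per-channel normalization, x' = (x - mean) / std, into the weights and bias of the first linear layer before export, so the exported graph can consume raw pixel values directly. The sketch below is plain Python with hypothetical helper names (`linear`, `normalize`, `fold_normalization`) and a dense layer for brevity; it is not part of SnapML or any Snap tooling, and simply prepending an explicit normalization layer before exporting to ONNX is an equally valid alternative.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Dense layer y = W x + b, written with plain lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def normalize(x: Vector, mean: Vector, std: Vector) -> Vector:
    """The pre-processing step we want to move inside the model."""
    return [(xi - m) / s for xi, m, s in zip(x, mean, std)]


def fold_normalization(
    weights: Matrix, bias: Vector, mean: Vector, std: Vector
) -> Tuple[Matrix, Vector]:
    """Return (W', b') so that linear(W', b', x) == linear(W, b, normalize(x, mean, std)).

    W'[j][i] = W[j][i] / std[i]
    b'[j]    = b[j] - sum_i W[j][i] * mean[i] / std[i]
    """
    new_weights = [[w / s for w, s in zip(row, std)] for row in weights]
    new_bias = [
        b - sum(w * m / s for w, m, s in zip(row, mean, std))
        for row, b in zip(weights, bias)
    ]
    return new_weights, new_bias


if __name__ == "__main__":
    # Toy layer: 3 inputs (one RGB pixel in 0..255), 2 outputs.
    W = [[0.5, -1.0, 2.0], [1.5, 0.25, -0.75]]
    b = [0.1, -0.2]
    # Illustrative values only: map 0..255 to roughly [-1, 1].
    mean = [127.5, 127.5, 127.5]
    std = [127.5, 127.5, 127.5]

    pixel = [200.0, 30.0, 90.0]
    original = linear(W, b, normalize(pixel, mean, std))
    W_folded, b_folded = fold_normalization(W, b, mean, std)
    folded = linear(W_folded, b_folded, pixel)
    print(original)
    print(folded)  # equal to `original` up to floating-point rounding
```

The same algebra applies to a first convolutional layer, since each output is still a weighted sum over input channels. The usual caveat is zero padding: after folding, the padded border is zero in raw pixel space rather than in normalized space, so outputs near image edges can differ slightly.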

Custom models are integrated using Snap’s ML Component, which defines the model’s inputs and outputs and when the model should run (e.g. in real time on every frame, or upon a particular user action).
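Lens Studio exposes these choices through the ML Component’s own settings and scripting API, which we don’t reproduce here. To make “when the model should run” concrete, here is a hypothetical Python scheduler (`RunMode`, `InferenceScheduler`, and `on_frame` are our own illustrative names, not Snap’s API) that decides on each camera frame whether to invoke a model: every frame, every N frames, or once after a user action. Running inference on a separate thread, another option mentioned later in this post, is not modeled.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class RunMode(Enum):
    EVERY_FRAME = "every_frame"
    EVERY_N_FRAMES = "every_n_frames"
    ON_USER_ACTION = "on_user_action"


@dataclass
class InferenceScheduler:
    """Hypothetical helper: decides, frame by frame, whether to run a model."""

    mode: RunMode
    run_model: Callable[[int], None]  # called with the frame index
    every_n: int = 1
    _pending_action: bool = field(default=False, init=False)

    def on_user_action(self) -> None:
        # E.g. a tap: request exactly one inference on the next frame.
        self._pending_action = True

    def on_frame(self, frame_index: int) -> None:
        if self.mode is RunMode.EVERY_FRAME:
            should_run = True
        elif self.mode is RunMode.EVERY_N_FRAMES:
            should_run = frame_index % self.every_n == 0
        else:  # RunMode.ON_USER_ACTION
            should_run = self._pending_action
            self._pending_action = False  # consume the request
        if should_run:
            self.run_model(frame_index)


if __name__ == "__main__":
    ran: List[int] = []
    scheduler = InferenceScheduler(RunMode.EVERY_N_FRAMES, ran.append, every_n=3)
    for i in range(10):
        scheduler.on_frame(i)
    print(ran)  # [0, 3, 6, 9]
```

Running less often than every frame trades responsiveness for battery and thermal headroom, which is exactly the kind of per-Lens tuning this control makes possible.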

These models must be built outside of Lens Studio and imported. To help with that, Snap provides template Jupyter notebooks for a number of use cases: classification, object detection, style transfer, custom segmentation, and ground segmentation. You can use these as a starting point for your own custom models, especially if your use case matches one of these ML tasks.

Perhaps most importantly, because these models can be used directly in Snapchat as Lenses, they can quickly become available to millions of users around the world.

What SnapML Could Mean for ML Engineers and Teams

The above functionality, combined with Snap Lenses as a promising distribution channel, presents ML teams with a number of intriguing value propositions:

- Reduced barriers to entry: The ability to drop custom neural networks into Lens Studio, and then distribute them to Snap’s millions of users, is an exciting possibility. This means that teams have both a promising channel and a deployment target that doesn’t require developing an entire mobile application from scratch.

- Easier experimentation: Since teams won’t have to work through a full app release cycle to see their on-device models in action, it’ll be easier to experiment with brand- and product-based Lenses powered by immersive ML features.

- Democratized on-device ML: Part of our vision at Fritz AI is to build a product that helps democratize mobile machine learning, both through the underlying technology and the tools that make it possible. In our recent interview, Hart Woolery of 2020CV, one of SnapML’s early partners and creators, noted that SnapML’s potential reminds him, in some ways, of how YouTube helped democratize video creation.

Our Technical Overview

To get a sense of what it’s like to build custom experiences with SnapML in Lens Studio, I worked through a simple demo project. While I’ll cover some of the unique challenges of that project in my next blog post, I wanted to take a bit of time to provide a high-level technical overview of the new platform — what it does well, where things get tricky, and some nuances that are essential to consider when getting started.

Things SnapML Does Well

First, a look at things SnapML does really well:

- One of my favorite features is control over how the model runs (e.g. every frame, when a user takes an action, or in another thread). This lets you fine-tune the experience to fit your project’s requirements while also experimenting with interactive and immersive elements.

- You can also loop a sample video inside Lens Studio to see what your Lens does as you’re creating and editing it. I used my own images in the preview pane, as well as the live camera feed. I didn’t try using my own video clip, but I imagine that works, too. This kind of real-time visualization is a nice touch, and one that makes experimentation even easier.

- There’s also an impressive over-the-air (OTA) model delivery flow: scan a QR code to pair your device, click a button in Lens Studio to send the model to your phone, get a notification when it downloads, and it shows up as a Lens. I love how simple this ended up being, as model delivery can be a pain, especially when you have to work through a full app update to release a new ML feature.

- As mentioned above, the under-the-hood work that SnapML does is also a positive. There are still some lingering issues here (more on this below), but features like model conversion and built-in pre- and post-processing speed up project workflows significantly.

Areas for Improvement in SnapML

- Data can be difficult. The demo projects and notebooks provided by Snap use COCO as their data source. That means the object category you want to train on must be part of the COCO dataset, you have to write code to extract the images and annotations of interest, and you have no control over the quality of the annotations. As a result, working with unique or custom data is currently a bit of a pain point.

- Model training. Snap’s example Jupyter notebook uses PyTorch to train a custom segmentation model. Snap recommends starting with a MobileNet architecture, but you can’t quite use PyTorch’s pre-packaged MobileNet model out of the box: there’s a bit of extra code to write, in which you swap out layers to ensure compatibility.

- Model input and output formatting. Once you have a trained model, the SnapML docs make it seem pretty easy to use that model in a lens. They have a drag-and-drop interface and tools to adjust the inputs (e.g. stretching/scaling the camera feed, applying basic pre-processing) and outputs. But in practice, this was more difficult than I’d imagined. We’ll cover why in another blog post soon.
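To give a sense of the “extract the images/annotations of interest” step from the data bullet above, here is a minimal sketch that filters a COCO-format annotation dictionary down to a single category. It assumes the standard COCO JSON layout (top-level `images`, `annotations`, and `categories` lists, with annotations linked by `image_id` and `category_id`); the function name `filter_coco_by_category` and the tiny inline sample are hypothetical, and this is not the code from Snap’s notebooks.

```python
import json
from typing import Any, Dict


def filter_coco_by_category(coco: Dict[str, Any], category_name: str) -> Dict[str, Any]:
    """Keep only one category's annotations and the images they appear in."""
    matching = [c for c in coco["categories"] if c["name"] == category_name]
    if not matching:
        raise KeyError(f"category {category_name!r} is not in this annotation file")
    category_ids = {c["id"] for c in matching}

    # Annotations for the chosen category, then the images they reference.
    annotations = [a for a in coco["annotations"] if a["category_id"] in category_ids]
    image_ids = {a["image_id"] for a in annotations}
    images = [img for img in coco["images"] if img["id"] in image_ids]

    # The result is itself COCO-shaped, so existing loaders can consume it.
    return {"images": images, "annotations": annotations, "categories": matching}


if __name__ == "__main__":
    # Tiny illustrative stand-in for a real instances_*.json annotation file.
    sample = {
        "images": [
            {"id": 1, "file_name": "a.jpg", "width": 640, "height": 480},
            {"id": 2, "file_name": "b.jpg", "width": 640, "height": 480},
        ],
        "annotations": [
            {"id": 10, "image_id": 1, "category_id": 18, "bbox": [10, 20, 100, 80], "iscrowd": 0},
            {"id": 11, "image_id": 2, "category_id": 1, "bbox": [5, 5, 50, 120], "iscrowd": 0},
        ],
        "categories": [
            {"id": 1, "name": "person", "supercategory": "person"},
            {"id": 18, "name": "dog", "supercategory": "animal"},
        ],
    }
    subset = filter_coco_by_category(sample, "dog")
    print(json.dumps(subset, indent=2))
```

If you already use `pycocotools`, its `COCO.getCatIds`, `getImgIds`, and `getAnnIds` methods do the same lookups. Either way, the limitation from the bullet above stands: if your category isn’t among COCO’s labels, there is nothing to extract, and you may also want to drop crowd annotations (`iscrowd == 1`) depending on your training setup.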

What’s Next

All told, SnapML is an incredible leap forward for mobile machine learning, and for edge AI more broadly. V1 is impressive and promising, and the areas for improvement we explored above look more like the growing pains of any ambitious software’s initial release than like major roadblocks.

In addition to this overview, we’ve taken a closer look and done some hands-on work with the new framework as part of our continuing series. You can find these resources below:
