WWDC20

WWDC20 has dropped a ton of great updates for developers and users. Throughout the Keynote and the Platforms State of the Union, Apple mentioned Core ML models powering some incredible first-party features, such as sleep tracking. ML continues to play a key role in Apple's vision for the future.

Core ML has been the framework that lets developers take their ideas to the next level. First, it allows developers to either create models (primarily through Create ML, if working within Apple's ecosystem) or convert models from third-party frameworks into the Core ML format (typically with Apple's coremltools).

Second, it provides an API that lets developers easily use their models in code to create some amazing experiences. Lastly, it gives (some) models access to the Neural Engine inside A-series chips, dedicated hardware engineered to run ML models efficiently.

Addressing Core ML’s Learning Disabilities

While Core ML and the Neural Engine are certainly great investments by Apple that benefit development on its platforms, there have been roadblocks to evolving an app's models once the app is out on the App Store.

Initially, this limited how models could evolve: developers had to release a new version of their app for every updated model they wanted to deploy. That remains the case for models whose training is too intensive for a mobile device.

Last year at WWDC19, Core ML 3 gave developers a way to extend model training to the device itself. While this was certainly welcome, it was still not ideal. Developers had to account for any edge cases users might introduce that could skew the model. And again, if the model required significant horsepower to train, devices simply don't make the cut.

Developers could instead use another ML framework that loads updated models downloaded at runtime, but that meant giving up the Neural Engine, potentially creating a performance bottleneck.

Introducing Core ML Model Deployment

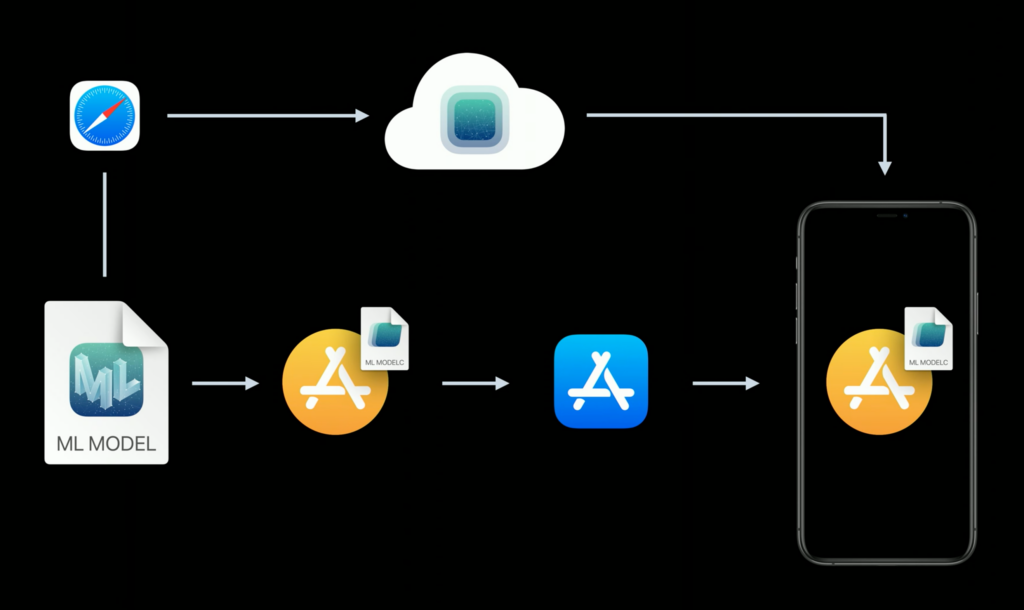

With the introduction of Core ML Model Deployment, we finally have a way to update models in apps without the need to sacrifice the Neural Engine or release new versions of our apps.

By adopting the deployment API (the `MLModelCollection` class in Core ML) alongside your existing Core ML code, you can enable your app to receive model updates seamlessly through the deployment service.
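A minimal sketch of what adoption might look like, assuming a Model Collection with the hypothetical identifier `FlowerModels` that contains a model with the hypothetical identifier `FlowerClassifier`, plus a copy of the same model bundled with the app. `MLModelCollection.beginAccessing(identifier:completionHandler:)`, the collection's `entries`, each entry's `modelURL`, and `MLModel(contentsOf:)` are Core ML API; `resolveModelURL` and `loadFlowerClassifier` are illustrative helpers. The Core ML portion is wrapped in `#if canImport(CoreML)` so the fallback logic also compiles on its own.

```swift
import Foundation
#if canImport(CoreML)
import CoreML
#endif

/// Prefers the model delivered by the deployment service and otherwise
/// falls back to the compiled model shipped inside the app bundle.
func resolveModelURL(deployed: URL?, bundled: URL) -> URL {
    return deployed ?? bundled
}

#if canImport(CoreML)
/// Loads the hypothetical "FlowerClassifier" entry of the hypothetical
/// "FlowerModels" collection, or the bundled copy if the collection is unavailable.
@available(iOS 14.0, macOS 11.0, tvOS 14.0, watchOS 7.0, *)
func loadFlowerClassifier(bundledURL: URL, completion: @escaping (MLModel?) -> Void) {
    _ = MLModelCollection.beginAccessing(identifier: "FlowerModels") { result in
        var deployedURL: URL?
        switch result {
        case .success(let collection):
            // Entries are keyed by model identifier.
            deployedURL = collection.entries["FlowerClassifier"]?.modelURL
        case .failure(let error):
            // Not downloaded yet, offline, etc.: keep working with the bundled model.
            print("Model collection unavailable: \(error.localizedDescription)")
        }
        let url = resolveModelURL(deployed: deployedURL, bundled: bundledURL)
        completion(try? MLModel(contentsOf: url))
    }
}
#endif
```

In an app, `bundledURL` would typically come from `Bundle.main.url(forResource: "FlowerClassifier", withExtension: "mlmodelc")`, since Xcode compiles bundled models to `.mlmodelc`. The completion handler may run off the main thread, so dispatch to the main queue before updating UI.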

Model Collections

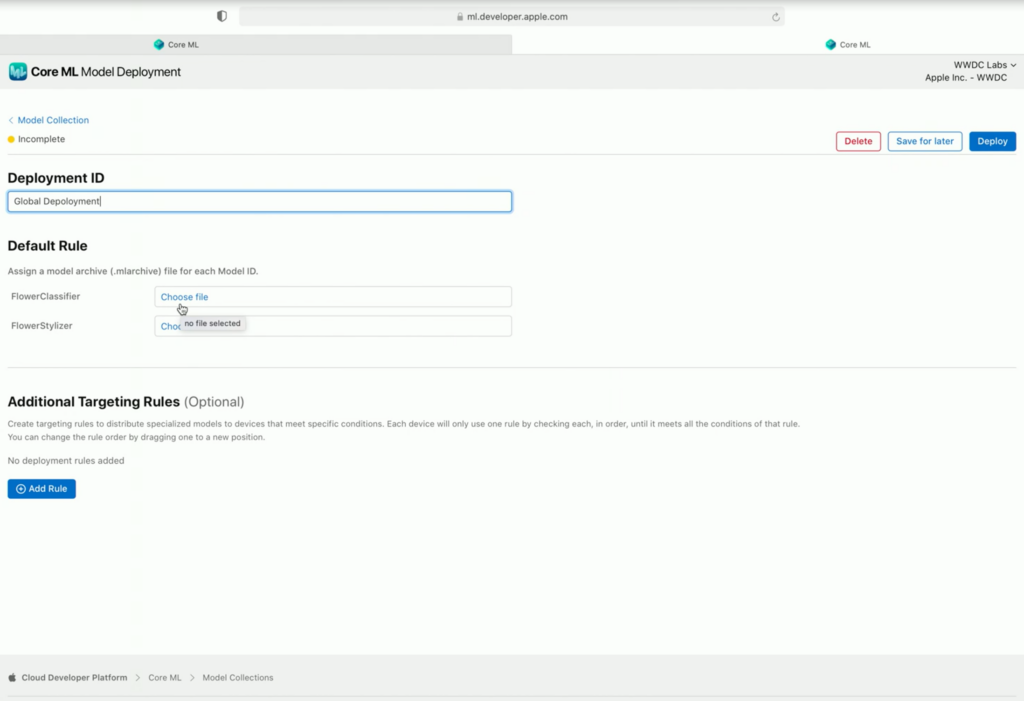

The service is organized around Model Collections: developers create a collection, and apps reference it by its identifier to receive the models it contains. A collection can hold one or more models, which are maintained and deployed together.
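Because the models in a collection are deployed together, an app that relies on several of them may want to avoid pairing a freshly deployed model with an older bundled one. The sketch below shows one possible policy under that assumption: use every required model from the collection, or every one from the bundle, but never a mix. `resolveModelSet` and `loadModelSet` are hypothetical helpers; only `MLModelCollection.beginAccessing(identifier:completionHandler:)`, `entries`, `modelURL`, and `MLModel(contentsOf:)` come from Core ML.

```swift
import Foundation
#if canImport(CoreML)
import CoreML
#endif

/// All-or-nothing selection: returns the deployed URLs if the collection supplied
/// every required model, else the bundled URLs if the bundle has them all, else nil.
func resolveModelSet(required: [String],
                     deployed: [String: URL],
                     bundled: [String: URL]) -> [String: URL]? {
    if required.allSatisfy({ deployed[$0] != nil }) {
        return deployed.filter { required.contains($0.key) }
    }
    if required.allSatisfy({ bundled[$0] != nil }) {
        return bundled.filter { required.contains($0.key) }
    }
    return nil
}

#if canImport(CoreML)
@available(iOS 14.0, macOS 11.0, tvOS 14.0, watchOS 7.0, *)
func loadModelSet(collectionID: String,
                  required: [String],
                  bundled: [String: URL],
                  completion: @escaping ([String: MLModel]?) -> Void) {
    _ = MLModelCollection.beginAccessing(identifier: collectionID) { result in
        // Map each entry to its model URL; empty if the collection is unavailable.
        let deployed = (try? result.get())?.entries.mapValues { $0.modelURL } ?? [:]
        guard let urls = resolveModelSet(required: required, deployed: deployed, bundled: bundled) else {
            completion(nil)
            return
        }
        var models: [String: MLModel] = [:]
        for (name, url) in urls {
            guard let model = try? MLModel(contentsOf: url) else { completion(nil); return }
            models[name] = model
        }
        completion(models)
    }
}
#endif
```

Whether mixing versions is actually a problem depends on how tightly your models depend on one another; independent models could just as well be resolved one at a time.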

Targeting Rules

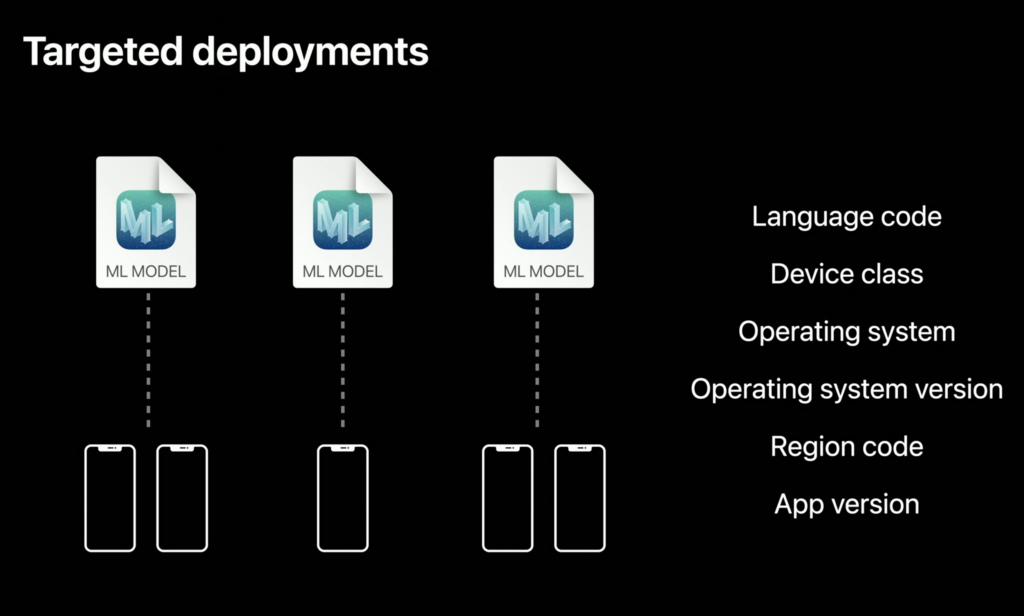

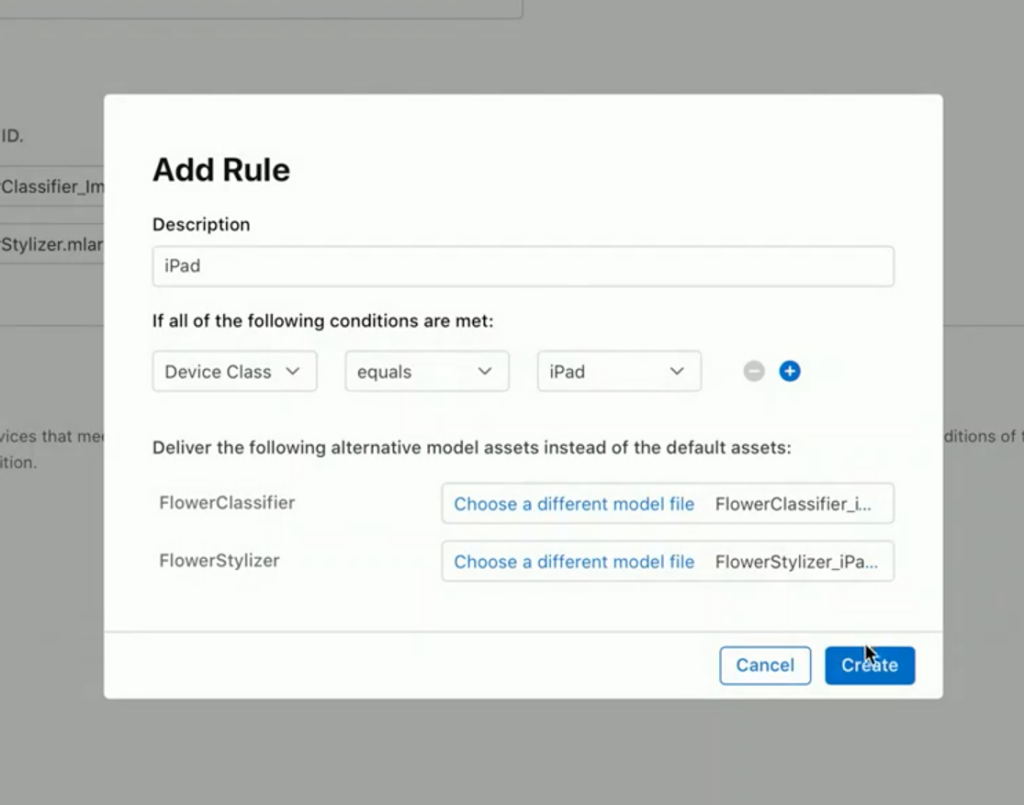

Model Deployment also lets developers attach targeting rules to their Model Collections. As shown in the screenshot above, the rules span six categories, so you can deploy each model to the devices and environments where it delivers the best experience.

The screenshot above (taken from the WWDC20 session) shows a collection that deploys separate models to iPads, while all other devices receive the collection's default models. In the demo, the iPad model was built to identify flowers better given the iPad's camera characteristics, which differ from those of iPhone cameras.
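Targeting rules are applied by the deployment service rather than by your code: the app keeps requesting the same collection and model identifiers, and each device simply receives whichever model its rules select. As a purely conceptual sketch of the iPad example (every type here is hypothetical and does not mirror Apple's implementation), a deployment can be modeled as a default model plus override rules:

```swift
import Foundation

/// Hypothetical device classes a rule might target.
enum DeviceClass {
    case iPhone, iPad, mac, appleTV, appleWatch
}

/// Hypothetical rule: devices of `deviceClass` receive `modelVersion`.
struct TargetingRule {
    let deviceClass: DeviceClass
    let modelVersion: String
}

/// Hypothetical deployment: a default model plus ordered override rules.
struct Deployment {
    let defaultModelVersion: String
    let rules: [TargetingRule]

    /// The first matching rule wins; otherwise the device gets the default model.
    func modelVersion(for device: DeviceClass) -> String {
        rules.first(where: { $0.deviceClass == device })?.modelVersion ?? defaultModelVersion
    }
}
```

For the demo's scenario, a `Deployment` with one `.iPad` rule would hand iPads their dedicated flower model and every other device the default one.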

Considerations

While Model Deployment is a big leap forward for ML evolution in apps, there are still some things to consider.

First, since the whole idea is to avoid releasing a new version of your app, your app's code must be written to accept model updates. Likewise, each updated model must keep the inputs and outputs your app expects so the app can continue using it correctly. You can verify this by running the model locally in your app during development, and then using Xcode 12 to prepare the model for deployment.
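One way to guard against a deployed model whose interface has drifted is to compare its feature names with the ones your code was written against and fall back to the bundled model on a mismatch. A minimal sketch, assuming the app knows its expected input and output names (the `image` and `classLabel` names are hypothetical). `modelDescription.inputDescriptionsByName` and `outputDescriptionsByName` are Core ML properties; `interfaceMatches` and `isCompatible` are illustrative helpers.

```swift
import Foundation
#if canImport(CoreML)
import CoreML
#endif

/// Inputs must match exactly (the app must be able to supply every one),
/// while extra outputs are tolerated as long as the expected ones exist.
func interfaceMatches(expectedInputs: Set<String>, expectedOutputs: Set<String>,
                      actualInputs: Set<String>, actualOutputs: Set<String>) -> Bool {
    expectedInputs == actualInputs && expectedOutputs.isSubset(of: actualOutputs)
}

#if canImport(CoreML)
extension MLModel {
    /// Checks this model's feature names against what the app was built for.
    func isCompatible(expectedInputs: Set<String>, expectedOutputs: Set<String>) -> Bool {
        interfaceMatches(expectedInputs: expectedInputs, expectedOutputs: expectedOutputs,
                         actualInputs: Set(modelDescription.inputDescriptionsByName.keys),
                         actualOutputs: Set(modelDescription.outputDescriptionsByName.keys))
    }
}
#endif
```

A stricter check could also compare each `MLFeatureDescription`'s `type`, and could relax the input rule for inputs marked optional.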

Second, consider how a new model might affect data your users have already stored in your app, and how results that differ from the previous model's might affect those users.
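A sketch of one way to keep this manageable: save, next to each stored result, an identifier for the model deployment that produced it (for example, the `deploymentID` string that `MLModelCollection` reports), so the app can later find results from older models and decide whether to re-run or flag them. `StoredPrediction` and `outdatedPredictions` are hypothetical names.

```swift
import Foundation

/// A hypothetical persisted model result, tagged with the deployment that produced it.
struct StoredPrediction: Codable, Equatable {
    let itemID: String
    let label: String
    let confidence: Double
    /// nil means the prediction came from the model bundled with the app.
    let deploymentID: String?
}

/// Returns predictions made by any model other than the one currently in use,
/// i.e. candidates to re-run or to flag for the user.
func outdatedPredictions(_ stored: [StoredPrediction],
                         currentDeploymentID: String?) -> [StoredPrediction] {
    stored.filter { $0.deploymentID != currentDeploymentID }
}
```

Because the struct is `Codable`, the tag is persisted together with the result, whatever storage the app already uses.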

Lastly, keep in mind that deploying a Model Collection does not mean every user receives it immediately. As Anil Katti explained in the WWDC20 session, each device downloads newly deployed models in the background, at a time the system determines.

There is also the case where a user is disconnected from the internet, either during that system-determined download window or for an extended period of time. We have to assume, then, that any expected change to the core user experience could be significantly delayed.

For example, developers must be careful with models that depend on specific times or dates. Analytics and usage data need similar care: if developers want to use that data to train updated versions of their models, it may be important to segment it by the Model Collection version that produced it.
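A minimal sketch of that segmentation, assuming each hypothetical `UsageEvent` carries a deployment identifier (such as the `deploymentID` that `MLModelCollection` reports, or a placeholder like `"bundled"` for the model shipped with the app). `Dictionary(grouping:by:)` is standard Swift; the acceptance-rate metric is purely illustrative.

```swift
import Foundation

/// A hypothetical analytics record sent from the app.
struct UsageEvent {
    let deploymentID: String        // e.g. "bundled" when no deployed model was in use
    let userAcceptedResult: Bool
}

/// Per-deployment summary, so data from different model versions is never pooled.
struct DeploymentStats: Equatable {
    let events: Int
    let acceptanceRate: Double
}

func statsByDeployment(_ events: [UsageEvent]) -> [String: DeploymentStats] {
    Dictionary(grouping: events, by: { $0.deploymentID }).mapValues { (group: [UsageEvent]) -> DeploymentStats in
        // Groups produced by Dictionary(grouping:by:) are never empty.
        let accepted = group.filter { $0.userAcceptedResult }.count
        return DeploymentStats(events: group.count,
                               acceptanceRate: Double(accepted) / Double(group.count))
    }
}
```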

Conclusion: Start Planning Your ML Deployment Strategy

Model Deployment is a great step forward for ML development on iOS and Apple's other operating systems. It's exciting to see Apple invest so heavily in ML and pave the way for great innovations in developers' apps, as well as its own.

The next step, then, is to start planning how your apps and models could benefit from the service. Could you offer regularly updated models to your users? Could you gather real-world data, use it to train your models centrally in new ways, and then distribute the results to users everywhere with little effort? I'm excited to see how developers use this service in their own apps and how the service itself evolves over time.
