Today, we’re excited to launch Fritz Hair Segmentation, giving developers and users the ability to alter their hair with different colors, designs, or images.

Try it out for yourself on Android. You can download our demo app on the Google Play Store to play around with hair coloring.

What is Hair Segmentation?

Hair segmentation, an extension of image segmentation, is a computer vision task that generates a pixel-level mask of a user’s hair within images and videos (live, recorded, or downloaded; from front- or rear-facing cameras).

This feature brings a fun and engaging tool to photo and video editing apps — your users can change up their look for social media, try on a custom hair dye color, trick their friends into thinking they got a purple streak, or support their favorite sports team by stylizing their hair.

With Fritz AI, any developer can easily add this feature to their apps. In this post, we’ll show you how.

Set up your Fritz AI account and initialize the SDK

First, you’ll need a Fritz AI account if you don’t already have one. Sign up here and follow these instructions to initialize and configure our SDK for your app.

If you don’t have an app but want to get started quickly, I’d recommend cloning our camera template app.

To follow along, import CameraBoilerplateApp as a new project in Android Studio, then add the code from each step of this tutorial to MainActivity.

Add the Hair Segmentation model via Gradle

In your app/build.gradle file, you can include the dependency with the following:

This includes the hair segmentation model in the app. Under the hood, we use TensorFlow Lite as our mobile machine learning framework. To make sure the model file isn’t compressed when the APK is built, add an aaptOptions block containing noCompress "tflite" inside the android block of the same build file.

Create a Segmentation Predictor with a Hair Segmentation model

To run segmentation on an image, we’ve created a Predictor class that simplifies all the pre- and post-processing for running a model in your app. Create a new predictor with the following:

```java
// Initialize the model included with the app
SegmentOnDeviceModel onDeviceModel = new HairSegmentationOnDeviceModelFast();

// Create the predictor with the Hair Segmentation model.
FritzVisionSegmentPredictor predictor = FritzVision.ImageSegmentation.getPredictor(onDeviceModel);
```
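The predictor hides the pre-processing step, but it can help to see what that step typically involves for an image model. The following is a minimal sketch, not the Fritz SDK’s actual code: the class name PreprocessSketch is hypothetical, and the nearest-neighbor resize, RGB channel order, and [0, 1] scaling are assumptions about what a TensorFlow Lite segmentation model might expect.

```java
// Hypothetical sketch of typical image-model pre-processing (resize + normalize).
// The resize method, channel order, and [0, 1] scaling are assumptions,
// not the Fritz SDK's actual implementation.
final class PreprocessSketch {
    private PreprocessSketch() {}

    /**
     * Resizes an ARGB pixel buffer with nearest-neighbor sampling and packs it into a
     * float array in height-width-channel order (R, G, B per pixel), scaled to [0, 1].
     */
    static float[] toInputTensor(int[] argb, int srcW, int srcH, int dstW, int dstH) {
        if (argb.length != srcW * srcH) {
            throw new IllegalArgumentException("pixel buffer does not match dimensions");
        }
        float[] out = new float[dstW * dstH * 3];
        int i = 0;
        for (int y = 0; y < dstH; y++) {
            int sy = y * srcH / dstH; // nearest source row
            for (int x = 0; x < dstW; x++) {
                int sx = x * srcW / dstW; // nearest source column
                int p = argb[sy * srcW + sx];
                out[i++] = ((p >> 16) & 0xFF) / 255f; // red
                out[i++] = ((p >> 8) & 0xFF) / 255f;  // green
                out[i++] = (p & 0xFF) / 255f;         // blue
            }
        }
        return out;
    }
}
```

The real model’s input resolution and normalization may differ; the point is only that the predictor performs this kind of conversion for you.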

Run prediction on an image to detect hair

Images can come from a camera, a photo roll, or live video.

In the code below, we convert an android.media.Image object (YUV_420_888 format) into a FritzVisionImage object to prepare it for prediction. This is the format you typically receive when reading frames from a live camera capture session.

```java
// Determine how to rotate the image from the camera used.
ImageRotation rotation = FritzVisionOrientation.getImageRotationFromCamera(this, cameraId);

// Create a FritzVisionImage object from android.media.Image
FritzVisionImage visionImage = FritzVisionImage.fromMediaImage(image, rotation);
```

You may also convert a Bitmap to a FritzVisionImage.
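The rotation step matters because a camera sensor’s orientation often differs from the device’s natural orientation (many phones report a sensor orientation of 90 or 270 degrees). As a rough illustration of the arithmetic involved, here is a sketch adapted from the standard Camera2 orientation calculation in the Android developer documentation. Whether FritzVisionOrientation.getImageRotationFromCamera computes it the same way is an assumption, and RotationSketch is a hypothetical name.

```java
// Sketch of the standard Camera2 orientation arithmetic. Whether the Fritz SDK
// uses exactly this formula is an assumption; RotationSketch is hypothetical.
final class RotationSketch {
    private RotationSketch() {}

    /**
     * @param sensorOrientation value of CameraCharacteristics.SENSOR_ORIENTATION (0, 90, 180, or 270)
     * @param deviceOrientation device rotation in degrees (0-359), e.g. from OrientationEventListener
     * @param frontFacing       true for the front-facing (selfie) camera
     * @return clockwise rotation in degrees that makes the frame upright
     */
    static int rotationDegrees(int sensorOrientation, int deviceOrientation, boolean frontFacing) {
        // Snap the device orientation to the nearest multiple of 90 degrees.
        int snapped = ((deviceOrientation + 45) / 90 * 90) % 360;
        // The front camera is mirrored, so the device rotation counts the other way.
        if (frontFacing) {
            snapped = -snapped;
        }
        // Add 360 before the modulo so the result is never negative.
        return (sensorOrientation + snapped + 360) % 360;
    }
}
```

For example, a rear camera with a sensor orientation of 90 degrees on a phone held upright (device orientation 0) needs a 90-degree clockwise rotation.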

After you’ve created a FritzVisionImage object, call predictor.predict.

```java
// Run the image through the model to identify pixels representing hair.
FritzVisionSegmentResult segmentResult = predictor.predict(visionImage);
```

This returns a segmentResult that you can use to display the hair mask. For more details on the different access methods, take a look at the official documentation.
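Segmentation models commonly output a score for every class at every pixel, and each pixel’s label is the class with the highest score. The sketch below shows that argmax post-processing step in plain Java. The [pixel][class] layout, the two-class setup (0 = background, 1 = hair), and the ArgmaxSketch name are assumptions for illustration, not the internal layout of FritzVisionSegmentResult.

```java
// Hypothetical post-processing sketch: per-pixel class scores -> label map.
// Layout is scores[pixel][class]; class order (0 = background, 1 = hair) is assumed.
final class ArgmaxSketch {
    private ArgmaxSketch() {}

    static int[] labelMap(float[][] scores) {
        int[] labels = new int[scores.length];
        for (int p = 0; p < scores.length; p++) {
            int best = 0;
            // Keep the highest-scoring class; ties go to the lower class index.
            for (int c = 1; c < scores[p].length; c++) {
                if (scores[p][c] > scores[p][best]) {
                    best = c;
                }
            }
            labels[p] = best;
        }
        return labels;
    }
}
```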

Blend the mask onto the original image

Now that we have the result from the model, let’s extract the mask and blend it with the pixels on the original image.

First, pick one of three blend modes:

```java
// Choose ONE blend mode. The alternatives are commented out so that
// blendMode is declared only once (redeclaring it would not compile).

// Soft Light Blend
BlendMode blendMode = BlendMode.SOFT_LIGHT;

// Color Blend
// BlendMode blendMode = BlendMode.COLOR;

// Hue Blend
// BlendMode blendMode = BlendMode.HUE;
```

Next, let’s extract the mask of the pixels that the model classified as hair. The Segmentation Predictor has a method called buildSingleClassMask that returns an alpha mask of the classified pixels.
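Conceptually, a single-class mask keeps only the pixels assigned to one class and makes everything else transparent. Here is a hypothetical sketch of that idea on a flat array of per-pixel hair probabilities. It is not the signature or implementation of buildSingleClassMask; the MaskSketch name and the threshold, tint color, and alpha parameters are illustrative assumptions.

```java
// Hypothetical sketch of a single-class alpha mask (not the Fritz SDK's buildSingleClassMask).
// Threshold, tint color, and alpha are illustrative parameters.
final class MaskSketch {
    private MaskSketch() {}

    /**
     * Returns ARGB pixels: where the class probability exceeds the threshold, the tint
     * color with the given alpha; everywhere else, 0 (fully transparent).
     */
    static int[] singleClassMask(float[] classProbs, float threshold, int tintRgb, int maxAlpha) {
        int alphaBits = Math.max(0, Math.min(255, maxAlpha)) << 24; // clamp alpha to 0-255
        int rgb = tintRgb & 0x00FFFFFF;                              // drop any alpha in the tint
        int[] mask = new int[classProbs.length];
        for (int i = 0; i < classProbs.length; i++) {
            mask[i] = classProbs[i] > threshold ? (alphaBits | rgb) : 0;
        }
        return mask;
    }
}
```

Using a partial alpha (for example 180 instead of 255) lets some of the original hair texture show through once the mask is blended.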

Finally, let’s blend maskBitmap with the original image.
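To make the blend step concrete, the sketch below applies the soft-light formula from the W3C Compositing and Blending specification to each RGB channel, then mixes the result with the original pixel according to the mask’s alpha. SoftLightSketch is a hypothetical name, and whether the Fritz SDK’s BlendMode.SOFT_LIGHT uses this exact formula and compositing order is an assumption.

```java
// Sketch: W3C soft-light blend, weighted per pixel by a mask's alpha.
// Whether the Fritz SDK blends exactly this way is an assumption.
final class SoftLightSketch {
    private SoftLightSketch() {}

    // W3C soft light for one channel: cb = backdrop (photo), cs = source (hair color), both in [0, 1].
    static double softLight(double cb, double cs) {
        if (cs <= 0.5) {
            return cb - (1 - 2 * cs) * cb * (1 - cb);
        }
        double d = cb <= 0.25 ? ((16 * cb - 12) * cb + 4) * cb : Math.sqrt(cb);
        return cb + (2 * cs - 1) * (d - cb);
    }

    /** Blends each mask pixel's color into the photo, weighted by that mask pixel's alpha. */
    static int[] blend(int[] photo, int[] mask) {
        if (photo.length != mask.length) {
            throw new IllegalArgumentException("photo and mask must be the same size");
        }
        int[] out = new int[photo.length];
        for (int i = 0; i < photo.length; i++) {
            double a = ((mask[i] >>> 24) & 0xFF) / 255.0; // mask alpha in [0, 1]
            int result = photo[i] & 0xFF000000;            // keep the photo's own alpha
            for (int shift = 16; shift >= 0; shift -= 8) { // red, green, blue
                double cb = ((photo[i] >> shift) & 0xFF) / 255.0;
                double cs = ((mask[i] >> shift) & 0xFF) / 255.0;
                double mixed = cb * (1 - a) + softLight(cb, cs) * a;
                int channel = (int) Math.round(mixed * 255);
                result |= Math.max(0, Math.min(255, channel)) << shift;
            }
            out[i] = result;
        }
        return out;
    }
}
```

With a mid-gray source color, soft light leaves the photo essentially unchanged, while darker or lighter colors push the hair toward that tone. In the W3C definitions, COLOR instead takes the hue and saturation of the source while keeping the photo’s luminosity, and HUE takes only the source’s hue.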

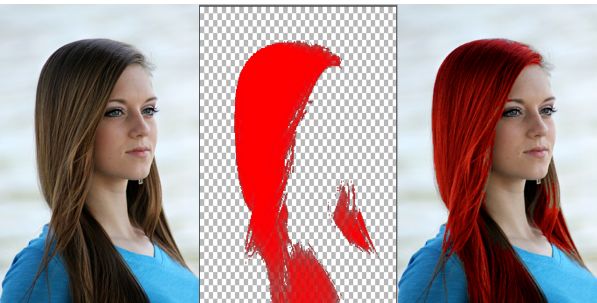

Here’s the final result of the blendedBitmap:

Check out our GitHub repo for the finished implementation.

With Hair Segmentation, developers are able to create new “try on” experiences without any hassle (or hair dye). Simply add a couple of lines of code to create deeply engaging features that help distinguish your Android app from the rest.
