Developers using Fritz AI can now add tags and metadata to on-device machine learning models. Models can be queried by tags and loaded dynamically via the iOS and Android SDKs, giving you more control over distribution and usage.

Deliver models to users based on hardware, location, software environment, or any other attribute.
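To make "query by tags" concrete, here is a minimal sketch of the idea using a local, in-memory catalog. `TaggedModel` and `models(in:matchingAll:)` are hypothetical names invented for illustration and are not part of the Fritz AI SDK. The sketch assumes a model matches only when it carries every requested tag, and shows free-form metadata stored alongside the tags.

```swift
// Hypothetical in-memory stand-in for a tagged model catalog.
// None of these types come from the Fritz AI SDK.
struct TaggedModel {
    let identifier: String
    let tags: Set<String>
    let metadata: [String: String]
}

// Returns every model that carries all of the requested tags.
func models(in catalog: [TaggedModel], matchingAll tags: Set<String>) -> [TaggedModel] {
    catalog.filter { tags.isSubset(of: $0.tags) }
}

// Illustrative entries; identifiers, tags, and metadata are made up.
let catalog = [
    TaggedModel(identifier: "labeler-small", tags: ["small", "image-labeling"], metadata: ["alpha": "0.35"]),
    TaggedModel(identifier: "labeler-large", tags: ["large", "image-labeling"], metadata: ["alpha": "1.0"]),
]

let matches = models(in: catalog, matchingAll: ["small", "image-labeling"])
print(matches.map { $0.identifier })  // ["labeler-small"]
```

An all-tags-must-match rule is only one possible design; a real service may combine tags differently.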

A practical example

Imagine we’re building an app that uses real-time image labeling to identify content in photos. We train a neural network using the default configuration of MobileNet V2 and test it on a few devices. The iPhone XS, with its A12 Bionic processor, can run machine learning models up to 9X faster than the iPhone X and up to 50X faster than the iPhone 6. Our app runs in real time on the latest flagship, but lags badly on older devices. What should we do?

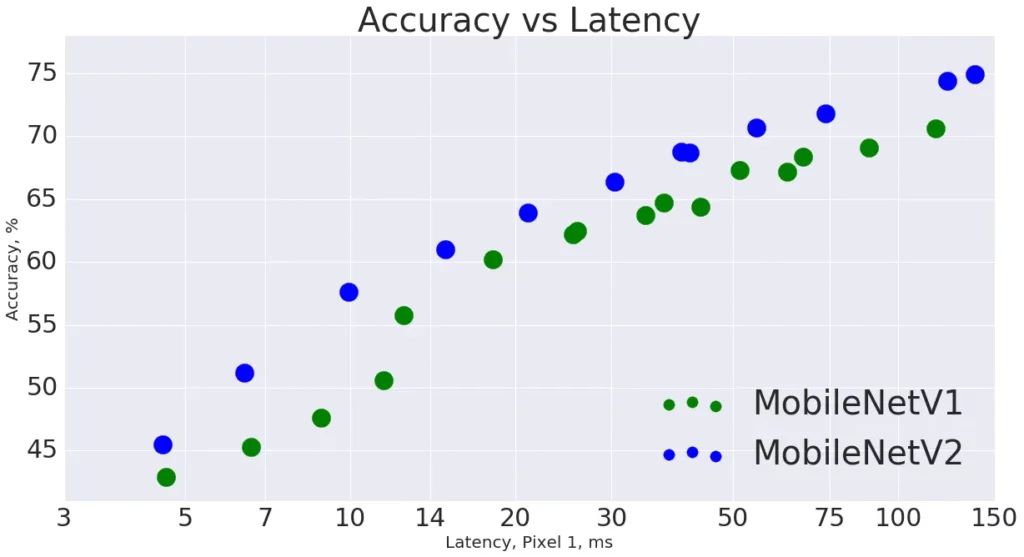

The MobileNet architecture provides a handy parameter, alpha (the width multiplier), that scales the number of channels, and therefore the number of weights, in every layer. We can decrease alpha from its default value of 1.0 to create smaller models that run much faster at the cost of only a few points of accuracy. The graph below shows this tradeoff.
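As a rough guide, the original MobileNet paper notes that the width multiplier shrinks both the input and output channels of each layer, so computational cost and parameter count fall roughly with alpha squared. The sketch below simply evaluates that approximation. It is a back-of-the-envelope estimate rather than a measurement; real speedups depend on the device and on any layers the multiplier does not scale.

```swift
import Foundation

// Rough estimate only: compute and parameter count scale approximately
// with alpha squared, relative to the default alpha of 1.0.
func approximateRelativeCost(alpha: Double) -> Double {
    precondition(alpha > 0, "alpha must be positive")
    return alpha * alpha
}

// Print the estimated cost for a few common alpha values.
for alpha in [1.0, 0.75, 0.5, 0.35] {
    let cost = approximateRelativeCost(alpha: alpha)
    print(String(format: "alpha %.2f -> ~%.0f%% of the alpha 1.0 cost", alpha, cost * 100))
}
```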

To provide the best experience regardless of device, we’ll ship different versions of our model to different devices based on the hardware. Users with an iPhone 6 or older will get the smallest version of the model, the iPhone 8, X, and XS will get the largest version, and the devices in between (iPhone 6s, SE, and 7) will get an intermediate version.

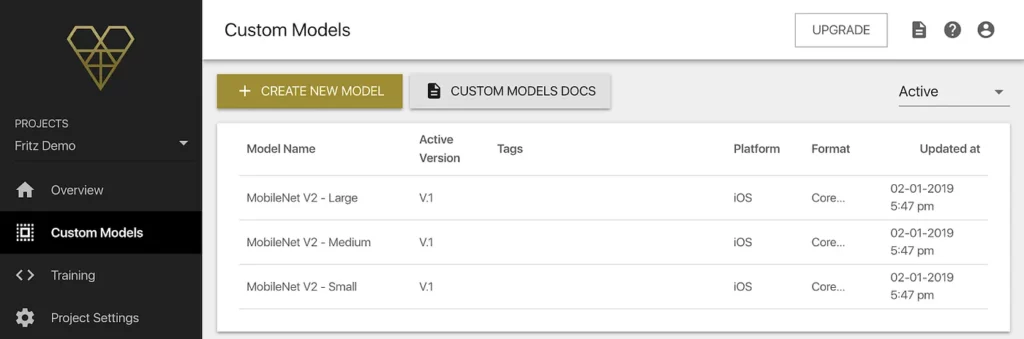
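One way to keep the three tiers consistent between the webapp and the app is to define the tag strings once. The `ModelTier` enum below is a hypothetical helper, and the alpha values attached to each tier are illustrative assumptions, not settings prescribed by Fritz AI.

```swift
// Hypothetical helper: the raw values are the tag strings, so a typo
// cannot silently request a tag that does not exist.
enum ModelTier: String, CaseIterable {
    case small, medium, large

    // Width multiplier each tier might be trained with (assumed values).
    var alpha: Double {
        switch self {
        case .small: return 0.35
        case .medium: return 0.75
        case .large: return 1.0
        }
    }

    // The tag to attach in the webapp and to query from the app.
    var tag: String { rawValue }
}

for tier in ModelTier.allCases {
    print("\(tier.tag): alpha \(tier.alpha)")
}
```

With an enum like this, `getTagFromMachineIdentifier` could return `ModelTier.large.tag` instead of a string literal.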

Step 1: Upload our custom models

Step 2: Add tags to the models in the webapp

Step 3: Fetch the appropriate model inside our app

First, we need to identify the type of device running the app and map it to the proper model. The machine identifier of the device will be a string such as iPhone10,3 (iPhone X) or iPhone7,2 (iPhone 6). We can then extract the major version number (e.g. 10 or 7 in the previous examples) and map it to a model tag. Note that this number does not follow the marketing name: an iPhone 7, for instance, reports an iPhone9,x identifier.

```swift
import Foundation

private var machineIdentifier: String {
    // Returns a machine identifier string, e.g. "iPhone10,3" or "iPhone7,2".
    // A full list of machine identifiers can be found here:
    // https://gist.github.com/adamawolf/3048717
    if let simulatorModelIdentifier = ProcessInfo.processInfo.environment["SIMULATOR_MODEL_IDENTIFIER"] {
        return simulatorModelIdentifier
    }

    var systemInfo = utsname()
    uname(&systemInfo)

    // utsname.machine is a fixed-size C char tuple; read it as a C string.
    return withUnsafeMutablePointer(to: &systemInfo.machine) { ptr in
        String(cString: UnsafeRawPointer(ptr).assumingMemoryBound(to: CChar.self))
    }
}

func getTagFromMachineIdentifier(machineIdentifier: String) -> String {
    // Split on every non-digit character and drop the empty pieces,
    // so "iPhone10,2" becomes ["10", "2"].
    let numbers = machineIdentifier
        .components(separatedBy: CharacterSet.decimalDigits.inverted)
        .filter { !$0.isEmpty }

    // Fall back to the medium model instead of crashing if no number is found.
    guard let first = numbers.first, let majorVersion = Int(first) else {
        return "medium"
    }

    // iPhone10,x and up (iPhone 8, 8 Plus, X, XS, ...) get the large model.
    if majorVersion >= 10 { return "large" }

    // iPhone7,x and below (iPhone 6, 6 Plus, and older) get the small model.
    if majorVersion <= 7 { return "small" }

    // iPhone8,x and iPhone9,x (iPhone 6s, SE, 7) get the medium model.
    return "medium"
}

// Usage:
// getTagFromMachineIdentifier(machineIdentifier: "iPhone10,2")
// >> "large"
// getTagFromMachineIdentifier(machineIdentifier: "iPhone6,1")
// >> "small"
```

Next, use the SDK to query for models matching these tags.

```swift
import Fritz

// Choose the tag for this device using the helpers above.
let tag = getTagFromMachineIdentifier(machineIdentifier: machineIdentifier)

// Fetch all of the models matching that tag.
let tagManager = ModelTagManager(tags: [tag])

// Downloaded models are collected here.
// In this case, we should only have a single model for each tag.
var allModels: [FritzMLModel] = []

tagManager.fetchManagedModelsForTags { managedModels, error in
    guard let fritzManagedModels = managedModels, error == nil else {
        return
    }

    // Loop through all of the models returned and download each one.
    for managedModel in fritzManagedModels {
        // Verify the model we get back has the correct tags
        // (without crashing if a model has no tags).
        let config = managedModel.activeModelConfig
        print("Model ID: " + config.identifier + " Tags: " + (config.tags ?? []).joined(separator: ", "))

        managedModel.fetchModel { downloadedModel, error in
            guard let fritzMLModel = downloadedModel, error == nil else {
                return
            }
            allModels.append(fritzMLModel)
        }
    }
}
```

Without tags, inference ran in 5ms on iPhone XS and 100ms on iPhone 6. Thanks to model tagging, we’ve reduced the inference time on iPhone 6 to just 25ms. Now users get a smooth experience regardless of device. As always, you can track model performance metrics on the Fritz AI dashboard and ship updated models over-the-air as you make improvements.
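To put these latencies in perspective, a 30 fps camera feed leaves roughly 33 ms per frame (1000 / 30). The sketch below converts the numbers quoted above into the highest frame rate the model alone could sustain. It ignores capture, pre-processing, and drawing, and the 30 fps target is an assumption rather than part of the measurements.

```swift
// Highest frame rate the model alone could sustain at a given latency.
func maxFramesPerSecond(latencyMs: Double) -> Double {
    precondition(latencyMs > 0, "latency must be positive")
    return 1000.0 / latencyMs
}

// Assumed real-time target: a 30 fps camera feed.
let frameBudgetMs = 1000.0 / 30.0

// Latencies quoted in the post.
let measurements: [(label: String, latencyMs: Double)] = [
    ("iPhone XS, without tags", 5),
    ("iPhone 6, without tags", 100),
    ("iPhone 6, with tags", 25),
]

for m in measurements {
    let fps = Int(maxFramesPerSecond(latencyMs: m.latencyMs))
    let fits = m.latencyMs <= frameBudgetMs
    print("\(m.label): up to \(fps) fps, fits 30 fps budget: \(fits)")
}

// Speedup on iPhone 6 from switching to the smaller model.
let iPhone6Speedup = 100.0 / 25.0
print("iPhone 6 speedup: \(iPhone6Speedup)x")
```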

Uses of tags and metadata

- Target specific hardware: As we showed above, tags can be used to deliver specific models to specific devices based on hardware performance and availability.

- Access control: Use tags and metadata to control access to models or groups of models that users unlock, e.g. through in-app purchases.

- Localization: Provide users with models that have been localized to geographies or languages. E.g. text classifiers trained on corpora of a specific language.

- Resource management: Map models to other resources using metadata. E.g. links to thumbnail previews for a given model.
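For the access-control and localization cases, the app has to decide which tags to request. The sketch below assumes a hypothetical tag scheme ("lang-<code>" for localized models, "pack-<name>" for purchased model packs) and a hypothetical `modelTags` function; neither comes from Fritz AI. How a tag query combines several tags (all versus any) depends on the SDK, so check its documentation before passing a list like this to a query.

```swift
import Foundation

// Hypothetical tag scheme, assumed for illustration only:
//   "lang-<code>" for localized models (e.g. "lang-en"),
//   "pack-<name>" for models unlocked by an in-app purchase.
func modelTags(languageCode: String?, unlockedPacks: Set<String>) -> [String] {
    var tags: [String] = []
    // Fall back to English when the language code is unavailable.
    tags.append("lang-" + (languageCode ?? "en"))
    // Sort so the output order is stable regardless of Set ordering.
    tags += unlockedPacks.sorted().map { "pack-" + $0 }
    return tags
}

// Locale.current.languageCode is optional, which the function handles.
let requestedTags = modelTags(languageCode: Locale.current.languageCode, unlockedPacks: ["pets", "filters"])
print(requestedTags)
```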

Get started today

Want more control over your on-device machine learning models? Get started with a Fritz AI account today. Available on both iOS and Android.
