Lately, machines have been proving themselves as good as or even superior to people at certain tasks: they are already better at Go, chess, and even Dota 2. Algorithms compose music and write poetry. Scientists and entrepreneurs predict that artificial intelligence will greatly surpass human beings in the future.

A large part of what makes us human is our humor, and even though it’s believed that only humans can crack jokes, many scientists, engineers, and even regular people wonder: is it possible to teach AI how to joke?

Gentleminds, a developer of machine learning technology and computer vision, partnered with FunCorp to create a funny caption generator using the iFunny meme base.

Compared to composing music, it’s hard to describe what makes us laugh. Sometimes, we can hardly explain what exactly amuses us and why. Many researchers believe that a sense of humor is one of the last frontiers artificial intelligence needs to conquer to truly match human beings. Research suggests that a sense of humor began developing in humans long ago, in the course of mate selection.

This can be explained by the direct correlation between intellect and a sense of humor. Even today, someone’s sense of humor can show us the level of their intellect. The ability to joke requires difficult skills such as language proficiency and a broad outlook. In fact, a good command of language is especially important for certain styles of humor (British, for example) that are based primarily on word play. All in all, teaching artificial intelligence how to joke is no easy task.

Researchers from around the world are trying to teach artificial intelligence how to make jokes:

- Janelle Shane created a neural network that writes knock-knock jokes.

- Researchers from the University of Edinburgh developed a successful method of teaching a computer “I like my X like I like my Y, Z” jokes.

- Scientists from the University of Washington created a system that comes up with NSFW “that’s what she said” (TWSS) jokes.

- Researchers from Microsoft have also tried to teach a computer how to make jokes by using the cartoon contest from The New Yorker as material for teaching the system.

As you can see from these numerous examples, teaching artificial intelligence how to joke is a challenging task. It’s further complicated because it has no quality metric. Everyone takes jokes in a different way, and the formula for “making a funny joke” is at its core ambiguous.

In our experiment, we decided to simplify this task and add context in the form of a picture. The system was designed to write a funny caption for these pictures (i.e. memes). However, the project was made more complex because an additional blank space was added to each picture along with a mechanism to match the text to the picture.

We worked from the iFunny base of more than 17,000 memes. We split each meme into two parts: picture and caption. We only used memes with text above the picture.

We tried two approaches:

- Generating captions (in one case with the Markov model, and the other with a recurrent neural network)

- Selecting the most suitable caption for an image from the database. This was implemented on the basis of the visual component. In the first approach, we were looking for a caption inside the clusters built on the basis of memes. In the Word2VisualVec approach, which in this article we refer to as “membedding,” we tried to transfer images and text into a single vector space, where the relevant caption would be close to the image.

We describe our approaches in more detail below.

Analysis of the database

Any research in machine learning begins with data analysis. The first step was to understand what kind of images the database contains. Using a classification network trained on V4 of the Open Images Dataset (link below), we obtained for each image a vector of scores, one per category, and clustered the images based on these vectors.

We identified five major groups:

1) People

2) Food

3) Animals

4) Cars

5) Animation

The clustering results were used later in the construction of the basic solution.

To assess the quality of the experiments, we put together a test base composed of 50 images that covered the main categories, and then we evaluated the quality by using an “expert” council to determine if they were funny or not.

Search by cluster

This approach determined the cluster closest to the picture and then chose a caption from that cluster at random. The image descriptor was produced by a classification neural network. We used the five clusters mentioned above (people, food, animals, animation, cars) and a k-means algorithm.
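As a rough illustration of the idea (not the code used in the experiment), the cluster search can be sketched in plain Python. The function names, the toy two-dimensional descriptors, and the `captions_by_cluster` mapping are all hypothetical. A real descriptor would have one score per category.

```python
import random

def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_centroid(vector, centroids):
    """Index of the centroid closest to the vector."""
    return min(range(len(centroids)),
               key=lambda i: squared_distance(vector, centroids[i]))

def kmeans(vectors, k, iterations=20, seed=0):
    """Plain Lloyd's k-means; returns a list of k centroids."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in vectors:
            groups[nearest_centroid(v, centroids)].append(v)
        for i, members in enumerate(groups):
            if members:  # keep the old centroid if a cluster went empty
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def caption_by_cluster(image_vector, centroids, captions_by_cluster, rng=random):
    """Pick a random caption from the cluster nearest to the image."""
    cluster = nearest_centroid(image_vector, centroids)
    return rng.choice(captions_by_cluster[cluster])
```

Because the caption is drawn at random from the whole cluster, the quality of the result depends entirely on how homogeneous each cluster is.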

Some result examples are shown below. Since the clusters were quite large and their content varied, the ratio of phrases that fit and did not fit for the picture was 1 to 5. It may seem like this is because there were five clusters, but in fact, even if the cluster was determined correctly, there were still a large number of incorrect captions inside it.

Me buying food vs Me buying textbooks

Boss: It says here that you love science

Guy: Ya, I love to experiment

Boss: What do you experiment with?

Guy: Mostly just drugs and alcohol

Cop: Did you get a good look at the suspect?

Guy: Yes

Cop: Was it a man or a woman?

Guy: I don’t know I didn’t ask them

hillary: why didn‘t you tell me they were

reopening the investigation?

obama: we emailed you

«Ma’am do you have a permit for this business»

Girl: does it look like I’m selling donuts?!

A swarm of 20,000 bees once followed a car for two days because their queen was trapped inside the car.

I found the guy in those math problems with all the watermelons…

So that’s what those orange cones were for

Search by visual similarity

Our attempt at clustering suggested that we should try to narrow down the search field. If the images inside a cluster were indeed so diverse, then searching for the pictures closest to the query image could deliver better results.

In this experiment, we continued to use a neural network trained on 7,880 categories. In the first stage, we fed the network all the images and saved the top five categories for each one, as well as the values from the penultimate layer (which carry both visual information and information about categories).

While searching for a caption for each picture, we used the top 5 categories and searched the database for images with the most similar categories. Then we selected the closest ten, and from this set chose a caption randomly. We also ran an experiment where we searched using the values from the penultimate layer of the network.
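The category-based search can be sketched as follows. This is only an illustration: the text does not say how category similarity was measured, so the sketch assumes it is the number of shared top-5 categories, and the function names and the `database` layout are hypothetical.

```python
import random

def top_categories(scores, n=5):
    """Names of the n highest-scoring categories in a {category: score} dict."""
    return set(sorted(scores, key=scores.get, reverse=True)[:n])

def caption_by_category_overlap(query_scores, database, n_neighbors=10, rng=random):
    """database is a list of (category_scores, caption) pairs.

    Assumption: two images are similar when their top-5 category sets overlap.
    """
    query = top_categories(query_scores)
    ranked = sorted(
        database,
        key=lambda item: len(query & top_categories(item[0])),
        reverse=True,
    )
    neighbors = ranked[:n_neighbors]  # the closest images
    return rng.choice(neighbors)[1]   # a random caption among them
```

For the penultimate-layer variant, the overlap count would be replaced by a distance between the two feature vectors.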

The results of both methods were similar. On average, there were 1–2 successful captions for every 5 fails. This can be explained by the fact that in the captions for visually similar photos of people, a large role is played by the people’s emotions and the nuances of the situation. Here are some examples.

Me buying food vs Me buying textbooks

Don’t Act Like You Know

Politics If You Don’t

Know Who This Is Q

when u tell a joke and no one else laughs

When good looking people have no sense of humour

#whatawaste

Assholes, meet your king.

Free my boy he didn’t do nothing

I guess they didn’t read the license

plate

When someone starts telling you how

to drive from the backseat

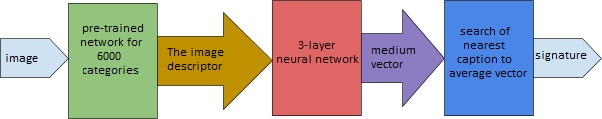

We call the next approach Membedding: finding the most appropriate caption by transferring the image descriptor into the vector space of text descriptors.

The purpose of Membedding is to find the space in which the vectors that interest us are “close.” Let’s try the approach from the Word2VisualVec paper.

We have pictures and captions for them. We want to find text that is “close” to the image. In order to address this issue, we need to:

1) Construct a vector describing the image

2) Construct a vector describing the text

3) Construct a vector space with the desired properties (a text vector “close” to the image vector)

To build a vector describing an image, we used a neural network pre-trained for 6,000+ classes.

As a vector, we will take the output from the penultimate layer of this network with a dimensionality of 2,048.

We adopted two approaches to vectorize the text: Bag-of-Words and Word2Vec. Word2Vec was trained using the words from all the image captions. The caption was transformed as follows: each word of the text was converted into a vector using Word2Vec, and then the caption vector was computed as the arithmetic mean of these word vectors.
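The averaging step is simple enough to show directly. In this minimal sketch, `word_vectors` is a hypothetical dictionary standing in for a trained Word2Vec model. Lowercasing and skipping words missing from the vocabulary are assumptions, not details from the experiment.

```python
def average_vector(caption, word_vectors):
    """Mean of the vectors of all known words in the caption.

    word_vectors maps a word to a list of floats (a stand-in for Word2Vec).
    Returns None when no word of the caption is in the vocabulary.
    """
    words = [w for w in caption.lower().split() if w in word_vectors]
    if not words:
        return None
    dim = len(word_vectors[words[0]])
    return [sum(word_vectors[w][i] for w in words) / len(words)
            for i in range(dim)]
```

Note that averaging discards word order, so “dog bites man” and “man bites dog” end up with the same vector.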

Thus, an image was fed as input into the neural network, and the average vector was predicted as the output. To “embed” text vectors into the vector space of image descriptors, we used a three-layer, fully connected neural network.

First, we calculated the vectors for the base of captions with the help of a trained neural network.

Then, using a convolutional neural network, we obtained a descriptor for the image that needed a caption and looked for the caption vectors closest to it in cosine distance, choosing either the closest one or a random one from the n closest.
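The lookup itself can be sketched like this, assuming the query vector and the caption vectors already live in the same space. The function names and the `caption_vectors` layout are hypothetical, and a real system would use a vectorized or approximate nearest-neighbor search rather than a full sort.

```python
import math
import random

def cosine_distance(a, b):
    """1 minus cosine similarity; 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm if norm else 1.0

def closest_captions(query_vector, caption_vectors, n=5):
    """caption_vectors is a list of (caption, vector) pairs."""
    ranked = sorted(caption_vectors,
                    key=lambda item: cosine_distance(query_vector, item[1]))
    return [caption for caption, _ in ranked[:n]]

def pick_caption(query_vector, caption_vectors, n=1, rng=random):
    """The closest caption when n == 1, otherwise a random one of the n closest."""
    return rng.choice(closest_captions(query_vector, caption_vectors, n))
```

Choosing randomly among the n closest trades some relevance for variety, which matters when similar images would otherwise always get the same caption.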

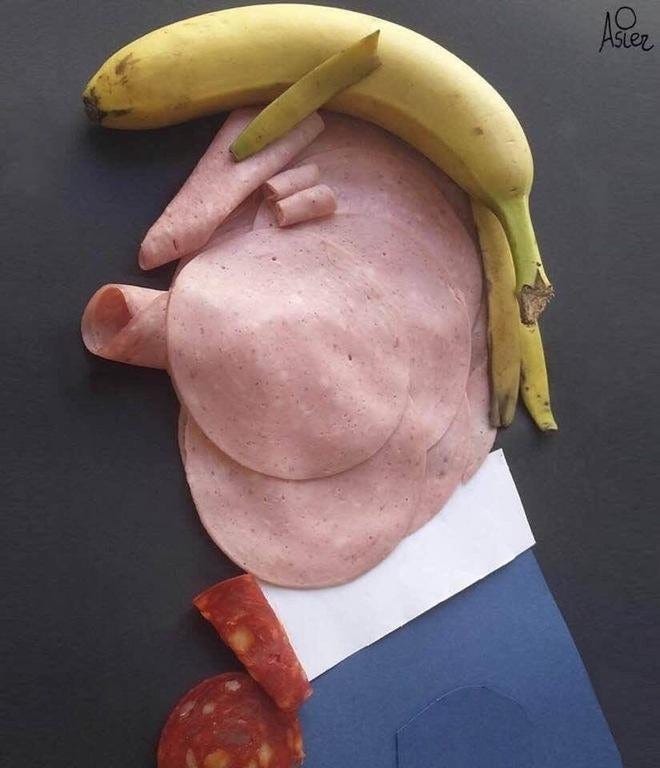

Good examples

Bad examples

To build a text vector using the Bag-of-Words method, we did the following: calculate the frequency of three-letter combinations in the captions, discard all that occur fewer than three times, and compose a dictionary of the remaining combinations.

To convert text into a vector, we calculate the number of occurrences of three-letter combinations from the dictionary in the text. The vector we obtain has a dimensionality of 5,322.
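A minimal sketch of this trigram Bag-of-Words follows. Lowercasing the text and counting spaces as part of a three-character window are assumptions. The text does not say how the original handled either.

```python
from collections import Counter

def trigrams(text):
    """All overlapping three-character windows of the lowercased text."""
    text = text.lower()
    return [text[i:i + 3] for i in range(len(text) - 2)]

def build_dictionary(captions, min_count=3):
    """Trigrams that occur at least min_count times across all captions."""
    counts = Counter(t for caption in captions for t in trigrams(caption))
    return sorted(t for t, n in counts.items() if n >= min_count)

def vectorize(text, dictionary):
    """Count of each dictionary trigram in the text, in dictionary order."""
    counts = Counter(trigrams(text))
    return [counts[t] for t in dictionary]
```

The length of the resulting vector equals the size of the dictionary, which is how a fixed dimensionality such as 5,322 arises from the caption base.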

Result (5 “closest” captions):

When she sends selfies but you wanted a better America

when ur enjoying the warm

weather in december but deep

down u know it’s because of

global warming

The stress of not winning an Oscar is

beginning to take its toll on Leo

Dear God, please make our the next

American president as strong as this

yellow button. Amen.

My laptop is set up to take a picture after 3

incorrect password attempts.

My cat isn’t thrilled with his new bird saving bib…

jokebud

This cat looks just like Kylo Ren from Star Wars

Ugly guys winning bruh QC

Single mom dresses as dad so her

son wouldn’t miss «Donuts With Dad»

day at school

Steak man gotta relax….

My friend went to prom with two dates.

It didn’t go as planned…

For similar images, the captions are almost the same:

My girlfriend can take beautiful photos

of our cat. I seemingly can’t…

My laptop is set up to take a picture after 3

incorrect password attempts.

This cat looks just like Kylo Ren from Star Wars

As a result, the ratio of successful to unsuccessful outcomes turned out to be approximately 1 to 10. This fact can be explained by the small quantity of universal captions, as well as the presence in the training sample of a large percentage of memes with captions that only make sense if the user has some prior knowledge.

Generation of captions: the WordRNN approach

The basis of this method is a two-layer recurrent neural network, each layer of which is an LSTM. The main property of such networks is their ability to extrapolate the time series, in which the next value depends on the previous one. The captions we are dealing with are such time series.

This network was trained to predict each next word in the text. The entire set of captions was used as the training sample. We assumed the network would be able to learn to generate captions that were meaningful (in some way), or at least funny in their absurdity.

Only the first word was fed in; the rest was generated.

Here’s what we got:

Trump: trump cats” got friends almost scared about the only thing in the universe

Obama: obama LAUGHING dropping FAVORITE 4rd FAVORITE 4rd long

Asian: asian RR I I look looks Me: much before you think u got gray candy technology that wore it

Cat: cat That when you only giving but the waiter said *hears feeling a cake with his bun

Car: Car Crispy “Emma: please” BUS 89% Starter be disappointed my mom being this out of pizza?

Teacher: teacher it’ll and and felt not to get out because ppl keep not like: he failed so sweet my girl: has

Contrary to our expectations, the resulting captions were nothing but a jumble of words, although in some cases the sentence structure was imitated rather well and some parts had meaning.

Generating captions using Markov Chains

Markov chains are a popular approach to natural language modeling. To build a Markov chain, the text corpus is divided into tokens (i.e. words). Groups of consecutive tokens serve as states, and for each state the probabilities of transition to the next word in the corpus are calculated. When generating, the next word is selected by sampling from the probability distribution obtained from this analysis of the corpus.

We used an existing library for the implementation, and captions that did not include dialogues were used as the training base.

Each new line = a new caption.
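To make the mechanics concrete, here is a small pure-Python sketch of such a chain. It is not the library used in the experiment. The `BEGIN` and `END` sentinel tokens and the function names are illustrative choices.

```python
import random
from collections import defaultdict

BEGIN, END = "___BEGIN__", "___END__"

def build_chain(captions, state_size=2):
    """Map each state (a tuple of state_size tokens) to its observed followers.

    Followers are stored with repetition, so a uniform random choice from
    the list samples the next word in proportion to its corpus frequency.
    """
    chain = defaultdict(list)
    for caption in captions:
        tokens = [BEGIN] * state_size + caption.split() + [END]
        for i in range(len(tokens) - state_size):
            state = tuple(tokens[i:i + state_size])
            chain[state].append(tokens[i + state_size])
    return chain

def generate(chain, state_size=2, max_words=30, rng=random):
    """Walk the chain from the start state until END or max_words."""
    state = (BEGIN,) * state_size
    words = []
    while len(words) < max_words:
        next_word = rng.choice(chain[state])
        if next_word == END:
            break
        words.append(next_word)
        state = state[1:] + (next_word,)
    return " ".join(words)
```

A larger `state_size` makes each state rarer in the corpus, so the output copies longer stretches of real captions. That is the trade-off behind the difference between the two-word and three-word results.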

Result (state — 2 words):

when your homies told you m 90 to

dwaynejohnson& the rock are twins. like if they own the turtle has a good can of beer & a patriotic flag tan.

this guy shows up on you, but you tryna get the joke your parents vs me as much as accidentally getting ready to go to work in 5 mins to figure out if your party isn’t this lit. please don’t be sewing supplies…

justin hanging with his legos

when ya mom finds the report card in her mind is smoking 9h and calling

texting a girl that can save meek

Result (state — 3 words):

when you graduate but you don’t like asks for the answers for the homework

when u ugly so u gotta get creative

my dog is gonna die one day vs when you sit down and your thighs do the thing

when you hear a christmas song on the radio but it’s ending

your girl goes out and you actually cleaned like she asked you to

chuck voted blair for prom queen 150 times and you decide to start making healthier choices.

when you think you finished washing the dishes and turn around and there are more on the stove

when you see the same memes so many times it asks for your passcode trust nobody not even yourself

The text is more meaningful with a three-word state than with a two-word state, but the quality is still not good enough for direct use. This approach could probably be used to generate captions that are then moderated by humans.

Instead of a conclusion

Teaching an algorithm to write jokes is at once an incredibly difficult and fascinating task. But reaching this goal will make intelligent assistants more “human.”

For example, you might imagine the robot from “Interstellar,” whose humor level would be regulated, and whose jokes would be more unpredictable than in the current versions of digital assistants.

In light of all current experiments, we can make the following general conclusions:

1) The approach involving the generation of captions requires highly complex and time-consuming work on the text corpus, the training method, and the architecture of the model. Its results are also hard to predict.

2) More predictable in terms of results is the approach with the selection of a caption from an existing database. But this also presents certain difficulties:

- Memes that can only be understood with some prior knowledge. Such memes are difficult to separate out, and if they get into the base, the quality of the jokes will decrease.

- Memes where you need to understand what’s happening in the picture, i.e. the action or situation. Such memes also reduce the accuracy if we put these images into the database.

3) From an engineering perspective, it seems that at this stage the best solution is a careful selection of phrases by an editorial team in the most popular categories. These are selfies (because people usually check the system for themselves or photos of friends and acquaintances), celebrity pics (Trump, Putin, Kim Kardashian, etc.), pets, cars, food, and nature. You can also create a “miscellaneous” category and have jokes pre-prepared in the event that the system did not recognize what’s in the picture.

In general, today’s artificial intelligence isn’t capable of generating jokes (but to be fair, not all people are either). However, it’s quite capable of choosing the right one. So moving forward, we’ll keep an eye on this topic and continue making our own contributions!
