In this article, I’ll show you how to build your own real-time object detection iOS app. Thanks to articles other people have written, it’s easy to train your own object detection model with TensorFlow’s Object Detection API and integrate the trained model into an iOS app.

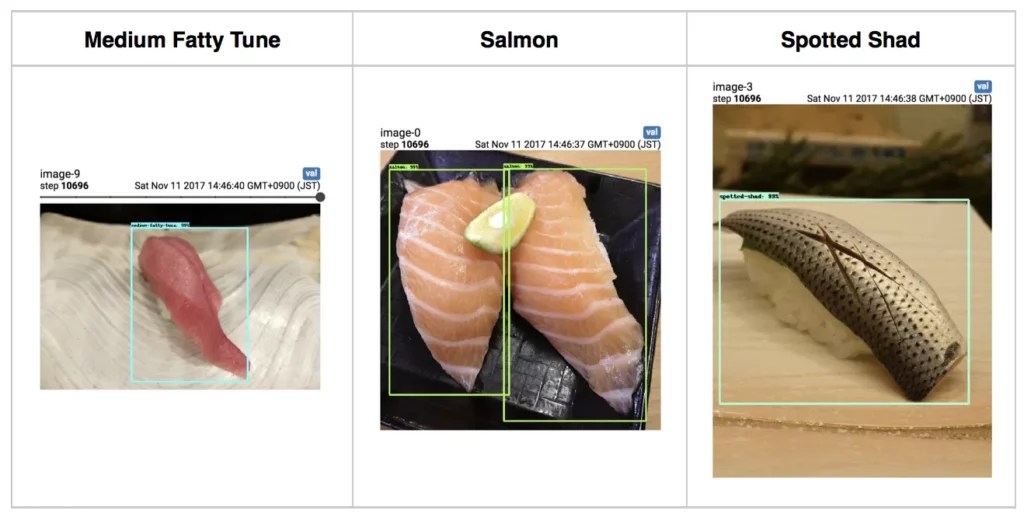

This is the completed version of my real-time sushi recognition iOS app:


Motivation

When Google released its TensorFlow Object Detection API, I was really excited and decided to build something with it. I thought a real-time object detection iOS (or Android) app would be awesome.

Of course, you can host a remote API that detects objects in a photo. But sometimes you don’t want to wait on a network round trip, and sometimes you don’t have reliable Internet access. When you want to know immediately what objects are in front of you (and where), detecting them on the device, directly in the camera view, can be useful.

Why sushi? Because everyone likes sushi (this is what my colleague told me 😋)! In all seriousness, it would be awesome if an app could give people instant access to expert knowledge.

In my case, that knowledge is the types of sushi. Some types of sushi look similar, and some look totally different. These subtle similarities and differences make a good test case for measuring the capability of a real-time object detector.

Train your own object detection model

After reading a few blog posts, I was able to train my own object detection model. I won’t explain the details of this step in this article, but please check out my GitHub repository and/or the official documentation for more details.

Export trained model

Once training has finished, you’ll need to export your model. Follow this document for more details. In the output directory, you’ll find frozen_inference_graph.pb, which the iOS app will use later.
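As a rough illustration of the export step, the sketch below assembles the command line for the API's export script. The flag names are as I recall them from the TensorFlow 1 version of export_inference_graph.py (newer releases ship a different exporter script), and the three paths are hypothetical placeholders, so check both against your own checkout.

```python
import shlex


def build_export_command(pipeline_config, checkpoint_prefix, output_dir):
    """Assemble the argv list for the TF1 Object Detection API export script."""
    return [
        "python", "object_detection/export_inference_graph.py",
        "--input_type", "image_tensor",
        "--pipeline_config_path", pipeline_config,
        "--trained_checkpoint_prefix", checkpoint_prefix,
        "--output_directory", output_dir,
    ]


# Hypothetical paths: substitute your own config, checkpoint, and output folder.
command = build_export_command(
    "training/pipeline.config",
    "training/model.ckpt-20000",
    "exported_model",
)

# Print a copy-pastable shell command instead of running it here.
print(" ".join(shlex.quote(part) for part in command))
```

To actually run the export, you could pass `command` to `subprocess.run(command, check=True)` from the directory that contains the `object_detection` package.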

Build an iOS app using the trained model

Even though the accuracy of the trained model wasn’t perfect, I wanted to run it on mobile and see how it worked. Next, I needed to figure out how to integrate the TensorFlow model into an iOS app.

I found this repository, which demonstrates an object detection app on iOS using TensorFlow and pre-trained models. I’ll explain the steps I took to integrate my custom trained model into that iOS app project.

Build TensorFlow libraries for iOS

I first had to build the TensorFlow libraries for iOS on my machine. This step took a few hours.

After the build finished, I edited tensorflow.xcconfig, where the TensorFlow path is configured.

Integrate your own model

You can see all the changes I made in my GitHub repo.

Frozen model

After removing the existing models from the project, I added the frozen model I had exported earlier to my iOS project as a resource named op_inference_graph.pb. You can use a different file name as long as you update the model name in the code.

Label map

I also had to add the label map that was used in the training step, with one change: I added display_name to it. I then updated the label map name in the code.
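To make the role of display_name concrete, here is a minimal sketch that reads a label map in the Object Detection API's text format and builds the class id to on-screen label lookup. The label map contents below are made up for illustration (they are not the entries from my project), and this regex-based reader is only a stand-in for the protobuf parsing the app itself does.

```python
import re

# Hypothetical label map: the ids and names are invented for this example.
LABEL_MAP_TEXT = """
item {
  id: 1
  name: 'tuna'
  display_name: 'Tuna (Maguro)'
}
item {
  id: 2
  name: 'salmon'
  display_name: 'Salmon (Sake)'
}
"""

ITEM_RE = re.compile(r"item\s*\{(.*?)\}", re.DOTALL)
FIELD_RE = re.compile(r"^\s*(\w+)\s*:\s*['\"]?(.*?)['\"]?\s*$", re.MULTILINE)


def parse_label_map(text):
    """Return {class id: label}, preferring display_name over name."""
    labels = {}
    for body in ITEM_RE.findall(text):
        fields = dict(FIELD_RE.findall(body))
        labels[int(fields["id"])] = fields.get("display_name", fields["name"])
    return labels


labels = parse_label_map(LABEL_MAP_TEXT)
print(labels)
```

The detector outputs numeric class ids, so a lookup like this is what turns a detection into readable text on screen. Entries without a display_name fall back to name.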

Result

After the above steps, I was able to build an iOS app successfully.

Video:

Speed:

Each prediction takes about 0.1 seconds on average.

Conclusion

I hope you enjoyed this post and can now build your own real-time object detection app on iOS. In this post, I didn’t focus on app size or prediction speed. As a next step, I want to try TensorFlow Lite to reduce the app size and speed up inference. Google announced that TensorFlow Lite supports iOS’s Core ML, allowing us to convert TensorFlow models to Core ML. I’m looking forward to reporting those results later.

Thank you for reading this post. Please give me a 👏 if you enjoyed it.
