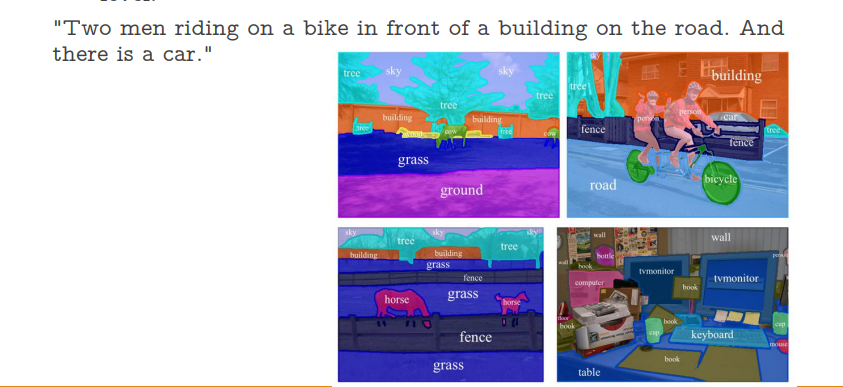

Semantic segmentation refers to the process of linking each pixel in an image to a class label. These labels could include a person, car, flower, piece of furniture, etc., just to mention a few.

We can think of semantic segmentation as image classification at a pixel level. For example, in an image that has many cars, segmentation will label all the objects as car objects. However, a separate class of models known as instance segmentation is able to label the separate instances where an object appears in an image. This kind of segmentation can be very useful in applications that are used to count the number of objects, such as counting the amount of foot traffic in a mall.

Some of its primary applications are in autonomous vehicles, human-computer interaction, robotics, and photo editing/creativity tools. For example, semantic segmentation is very crucial in self-driving cars and robotics because it is important for the models to understand the context in the environment in which they’re operating.

We’ll now look at a number of research papers on covering state-of-the-art approaches to building semantic segmentation models, namely:

- Weakly- and Semi-Supervised Learning of a Deep Convolutional Network for Semantic Image Segmentation

- Fully Convolutional Networks for Semantic Segmentation

- U-Net: Convolutional Networks for Biomedical Image Segmentation

- The One Hundred Layers Tiramisu: Fully Convolutional DenseNets for Semantic Segmentation

- Multi-Scale Context Aggregation by Dilated Convolutions

- DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs

- Rethinking Atrous Convolution for Semantic Image Segmentation

- Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

- FastFCN: Rethinking Dilated Convolution in the Backbone for Semantic Segmentation

- Improving Semantic Segmentation via Video Propagation and Label Relaxation

- Gated-SCNN: Gated Shape CNNs for Semantic Segmentation

Weakly- and Semi-Supervised Learning of a Deep Convolutional Network for Semantic Image Segmentation (ICCV, 2015)

This paper proposes a solution to the challenge of dealing with weakly-labeled data in deep convolutional neural networks (CNNs), as well as a combination of data that’s well-labeled and data that’s not properly labeled.

In the paper, a combination of deep CNNs with a fully-connected conditional random field is applied.

On the PASCAL VOC segmentation benchmark, this model gives a mean intersection-over-union (IOU) score above 70%. One of the major challenges faced with this kind of model is that it requires images that are annotated at the pixel level during training.

The main contributions of this paper are:

- Introduction of Expectation-Maximization algorithms for bounding box or image-level training that can be applied to both weakly-supervised and semi-supervised settings.

- Proves that combining weak and strong annotations improves performance. The writers of this paper reach 73.9% IOU performance on PASCAL VOC 2012 after merging annotations from the MS-COCO datasets and PASCAL datasets.

- Proves that their approach achieves higher performance by merging a small number of pixel-level annotated images and a large number of bounding-box or image-level annotated images.

Fully Convolutional Networks for Semantic Segmentation (PAMI, 2016)

The model proposed in this paper achieves a performance of 67.2% mean IU on PASCAL VOC 2012.

Fully-connected networks take an image of any size and generate an output of the corresponding spatial dimensions. In this model, ILSVRC classifiers are cast into fully-connected networks and augmented for dense prediction using pixel-wise loss and in-network up-sampling. Training for segmentation is then done by fine-tuning. Fine-tuning is done by back-propagation on the entire network.

U-Net: Convolutional Networks for Biomedical Image Segmentation (MICCAI, 2015)

In biomedical image processing, it’s very crucial to get a class label for every cell in the image. The biggest challenge in biomedical tasks is that thousands of images for training are not easily accessible.

This paper builds upon the fully convolutional layer and modifies it to work on a few training images and yield more precise segmentation.

Since very little training data is available, this model uses data augmentation by applying elastic deformations on the available data. As illustrated in figure 1 above, the network architecture is made up of a contracting path on the left and an expansive path on the right.

The contracting path is made up of two 3×3 convolutions. Each of the convolutions is followed by a rectified linear unit and a 2×2 max pooling operation for downsampling. Every downsampling stage doubles the number of feature channels. The expansive path steps include an upsampling of the feature channels. This is followed by 2×2 up-convolution that halves the number of feature channels. The final layer is a 1×1 convolution that is used to map the component feature vectors to the required number of classes.

In this model, training is done using the input images, their segmentation maps, and a stochastic gradient descent implementation of Caffe. Data augmentation is used to teach the network the required robustness and invariance when very little training data is used. This model achieved a mean intersection-over-union (IOU) score of 92% on one of the experiments.

The One Hundred Layers Tiramisu: Fully Convolutional DenseNets for Semantic Segmentation (2017)

The idea behind DenseNets is that having each layer connected to every layer in a feed-forward manner makes the network easier to train and more accurate.

The model’s architecture is built in dense blocks of downsampling and upsampling paths. The downsampling path has 2 Transitions Down (TD) while the upsampling path has 2 Transitions Up (TU). The circle and arrows represent connectivity patterns within the network.

The main contributions of this paper are:

- Extends the DenseNet architecture to fully convolutional networks for use in semantic segmentation.

- Proposes upsampling paths from dense networks that perform better than other upsampling paths.

- Proves that the network can produce state-of-the-art results on standard benchmarks.

This model achieves a global accuracy of 88% on the CamVid dataset.

Multi-Scale Context Aggregation by Dilated Convolutions (ICLR, 2016)

In this paper, a convolution network module that blends multi-scale context information without loss of resolution is developed. This module can then be plugged into existing architectures at any resolution. The module is based on dilated convolutions.

The module was tested on the Pascal VOC 2012 dataset. It proves that adding a context module to existing semantic segmentation architectures improves their accuracy.

The front-end module trained in experimentation achieves 69.8% mean IoU on the VOC-2012 validation set and 71.3% mean IoU on the test set. The prediction accuracy of this model on different objects is shown below

DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs (TPAMI, 2017)

In this paper the authors make the following contributions to the task of semantic segmentation with deep learning:

- Convolutions with upsampled filters for dense prediction tasks

- Atrous spatial pyramid pooling (ASPP) for segmenting objects at multiple scales

- Improving localization of object boundaries by using DCNNs.

The paper’s proposed DeepLab system achieves a 79.7% mIOU on the PASCAL VOC-2012 semantic image segmentation task.

The paper tackles the main challenges of using deep CNNs in semantic segmentation, which include:

- Reduced feature resolution caused by a repeated combination of max-pooling and downsampling.

- Existence of objects at multiple scales.

- Reduced localization accuracy caused by DCNN’s invariance since an object-centric classifier requires invariance to spatial transformations.

Atrous convolution is applied by either upsampling the filters by inserting zeros or sparsely sampling the input feature maps. The second method entails subsampling the input feature maps by a factor equal to the atrous convolution rate r, and deinterlacing it to produce r^2 reduced resolution maps, one for each of the r×r possible shifts. After this, a standard convolution is applied to the immediate feature maps, interlacing them with the image’s original resolution.

Rethinking Atrous Convolution for Semantic Image Segmentation (2017)

This paper addresses two challenges (mentioned previously) in using DCNNs for semantic segmentation; reduced feature resolution that occurs when consecutive pooling operations are applied and the existence of objects at multiple scales.

In order to address the first problem, the paper suggests the use of atrous convolution, also known as dilated convolution. It proposes solving the second problem using atrous convolution to enlarge the field of view and hence include multi-scale context.

The paper’s ‘DeepLabv3’ achieves a performance of 85.7% on the PASCAL VOC 2012 test set without DenseCRF post-processing.

Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation (ECCV, 2018)

This paper’s approach ‘DeepLabv3+’ achieves the test set performance of 89.0% and 82.1% without any post-processing on PASCAL VOC 2012 and Cityscapes datasets. This model is an extension of DeepLabv3 by adding a simple decoder module to refine the segmentation results.

The paper implements two types of neural networks that use a spatial pyramid pooling module for semantic segmentation. One captures contextual information by pooling features at different resolutions, while the other obtain sharp object boundaries.

FastFCN: Rethinking Dilated Convolution in the Backbone for Semantic Segmentation (2019)

This paper proposes a joint upsampling module named Joint Pyramid Upsampling (JPU) to replace the dilated convolutions that consume a lot of time and memory. It works by formulating the function of extracting high-resolution maps as a joint upsampling problem.

This method achieves a performance of mIoU of 53.13% on the Pascal Context dataset and runs 3 times faster.

The method implements a fully-connected network(FCN) as the backbone while applying JPU to upsample the low-resolution final feature maps, resulting in high-resolution feature maps. Replacing the dilated convolutions with the JPU does not result in any loss of performance.

Joint sampling uses low-resolution target images and high-resolution guidance images. It then generates high-resolution target images by transferring the structure and details of the guidance image.

Improving Semantic Segmentation via Video Propagation and Label Relaxation (CVPR, 2019)

This paper proposes a video-based method to scale the training set by synthesizing new training samples. This is aimed at improving the accuracy of semantic segmentation networks. It explores the ability of video prediction models to predict future frames in order to predict future labels.

The paper demonstrates that training segmentation networks on datasets from synthesized data lead to improved prediction accuracy. The methods proposed in this paper achieve mIoUs of 83.5% on Cityscapes and 82.9% on CamVid.

The paper proposes two ways of predicting future labels:

- Label Propagation (LP) creating new training samples by pairing a propagated label with the original future frame

- Joint image-label Propagation (JP) creating new training samples by pairing a propagated label with the corresponding propagated image

The paper has three main propositions; utilizing video prediction models to propagate labels to immediate neighbor frames, introducing joint image-label propagation to deal with the misalignment problem, and relaxing one-hot label training by maximizing the likelihood of the union of class probabilities along the boundary.

Gated-SCNN: Gated Shape CNNs for Semantic Segmentation (2019)

This paper is the newest kid on the semantic segmentation block. The authors proposes a two-stream CNN architecture. In this architecture, shape information is processed as a separate branch. This shape stream processes only boundary related information. This is enforced by the model’s Gated Convolution Layer (GCL) and local supervision.

This model outperforms the DeepLab-v3+ by 1.5 % on mIoU and 4% in F-boundary score. The model has been evaluated using the Cityscapes benchmark. On smaller and thinner objects, the model achieves an improvement of 7% on IoU.

The table below shows the performance of the Gated-SCNN in comparison to other models.

Conclusion

We should now be up to speed on some of the most common — and a couple of very recent — techniques for performing semantic segmentation in a variety of contexts.

The papers/abstracts mentioned and linked to above also contain links to their code implementations. We’d be happy to see the results you obtain after testing them.

Comments 0 Responses